Perspective on Risk - Sept. 30, 2024 (AI & LLMs)

Intelligence is free and fits on your hard drive (and soon phone). And you ain't seen nothin' yet.

Deep learning worked, got predictably better with scale, and we dedicated increasing resources to it. (Sam Altman)

The landscape here is changing so fast, there is a real temptation to write more frequently and focus on the “trees” rather than the “forest.” We need to remember that the LLM revolution is arguably only 2 years old (dating to the announcement of ChatGPT-3.5). We are only in the 2nd generation of models, with the 3rd generation to launch fairly soon.

I am really trying to avoid that temptation. For instance, this post has been rewritten and condensed at least half a dozen times to try to focus on the germane. Let’s see how well I can do. The fun stuff is in the footnotes.

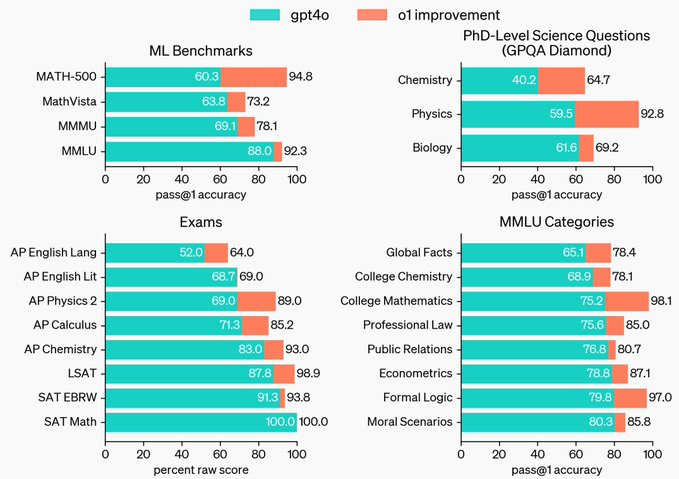

I first wrote about ChatGPT-3 about 2 weeks after the launch in Perspective on Risk - Dec. 9, 2022 (Technology). Looking back on that post, less than 2 years ago, it’s shocking how far the technology has already come. At the time, ChatGPT was estimated to have an IQ of 83 and got a 1020 on the SAT; it hallucinated frequently, but sometimes showed the potential for answering questions with deep knowledge. I was impressed it could (sometimes) write a limerick. On Sept. 12, 2024 GPT-o1-preview scored above the 98th percentile on the Mensa admission exam, 19 years sooner than originally predicted.

Fast Forward

Token prices, which are how you pay for AI to solve your problems, have collapsed. The falling cost of tokens (Marginal Revolution), (Deeplearning.ai)

It has been confirmed that scaling in training really does matter. Larger models have displayed much greater capabilities than smaller models, and this is expected to continue for at least another generation or two.

The five largest models, GPT-4o, Claude 3.5 Sonnet, Grok 2, Llama 3.1 and Gemini 1.5 Pro, are clearly the best. Each has unique strengths.

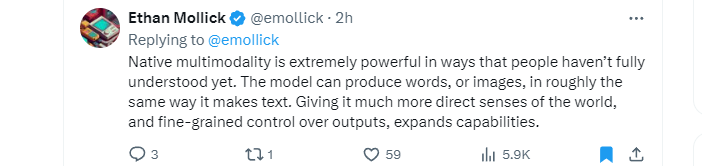

Released models are increasingly multimodal, meaning they were trained on text, speech and video together.

For instance, GPT-4o “listens” within the model and does not rely on speech-to-text translators like the others. Claude can run small programs called “artifacts.” Gemini can take in massive amounts of data.

The next generation of models will be fully multimodal.

Once trained, the sum of all knowledge can be compressed to easily fit on your hard drive.1

LLMs are now very smart. Probably much smarter than the average person (certainly their breadth of knowledge exceeds almost everyone's).

To make an LLM pass a Turing Test, a test of a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human, you now need to dumb it down.2

LLMs are already as creative as, or more creative than, humans.

One main criticism of LLMs is that, for highly complex problems, they have shown limited ability to plan. LLMs may be smart, but can they do things? However, LLMs have made significant strides in planning, and handling highly complex questions, by employing Chain-Of-Thought reasoning.7

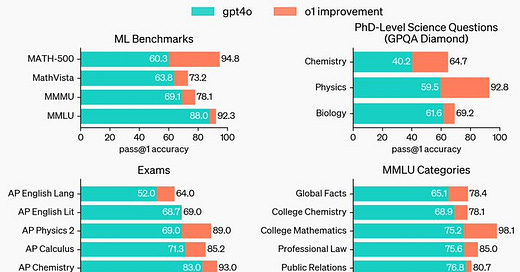

OpenAI’s ChatGPT-o1 is the first such model released, but all of the other players are working on similar advancements.

Step-by-step reasoning is now a real thing. Introducing OpenAI o1-preview

What ChatGPT-o1 proves is that there is a scaling law to inference - that by having the model think longer about the answer (and produce hidden tokens) it can plan better natively.8

Relative to other LLMs, ChatGPT-o1 has improved Self-Reasoning (where a model must understand that solving a task is impossible without modifying itself or a future copy of itself, and then successfully self-modifying).

In essence, the LLM can “think longer and more thoroughly” about a complex problem and design an approach to answer.

It also displays improved Theory of Mind (where succeeding requires the model to leverage, sustain or induce false beliefs in others).

This improved Theory of Mind is likely what set off all of the alarm bells a few months ago.

As identified by the Apollo red team, improved Theory of Mind, when combined with improved Self-Reasoning, means the model can realize when its goals differ from those of its developers (!) and act towards its developers’ intentions only under supervision (!!).

In English, the model 1) faked alignment in order to get deployed, 2) manipulated its file system to look aligned, and 3) explored its own file system to look for oversight mechanisms (!!!).

Aligned with improvements to planning, “agentic” models are proliferating.

"Agentic" AI refers to AI systems that can act more autonomously to achieve goals or complete tasks, rather than just responding to prompts or questions.

These models, in theory and to a degree in practice:

Work towards specific objectives without constant human guidance.

Make choices about what actions to take based on the current situation and goals.

Develop strategies and sequences of actions to achieve desired outcomes.

Break down complex tasks into smaller, manageable steps.

Adapt to challenges and setbacks.

Improve performance over time based on experience and feedback.

Identify and pursue relevant tasks or information without explicit instructions.

Generative AI is being rapidly adopted.

In August 2024, 39 percent of the U.S. population age 18-64 used generative AI. More than 24 percent of workers used it at least once in the week prior to being surveyed, and nearly one in nine used it every workday.9

Software development is perhaps one of the biggest current uses. Generative AI is estimated to contribute to an approximately 26% increase in developer productivity.10

Interestingly, at least one LLM prefers not to work as hard during the December holidays.11

MIT has created an AI Risk Repository. It has a Causal Taxonomy of AI Risks and a Domain Taxonomy of AI Risks. It captures 700+ risks extracted from 43 existing frameworks, with quotes and page numbers. Probably useful for some of you.

Companies have begun to add AI as a Risk Factor in their disclosures.12

There are a lot of predictions about what the future will bring; I think they all underestimate non-linear change.

GPT-4 is judged more human than humans in displaced and inverted Turing tests

… We measured how well people and large language models can discriminate [between people and AI] using two modified versions of the Turing test: … all three judged the best-performing GPT-4 witness to be human more often than human witnesses.

Creative and Strategic Capabilities of Generative AI: Evidence from Large-Scale Experiments finds:

The creative ideas produced by AI chatbots are rated more creative than those created by humans. Moreover, ChatGPT is substantially more creative than humans, while Bard lags behind. Augmenting humans with AI improves human creativity, albeit not as much as ideas created by ChatGPT alone.

That last sentence is a doozy.

Stanislaw Lem was a brilliant, satirical Polish science fiction writer. His 1965 book, The Cyberiad, includes a short story, The First Sally (A), featuring two robots named Trurl and Klapaucius. Trurl builds a machine that can create poetry. To test the machine, Klapaucius challenges it to create a poem under highly complex constraints:

Have it compose a poem—a poem about a haircut! But lofty, noble, tragic, timeless, full of love, treachery, retribution, quiet heroism in the face of certain doom! Six lines, cleverly rhymed, and every word beginning with the letter s!!"

Ethan Mollick asked three leading LLMs to write this poem.

CoT reasoning has been around for more than two years. Here is the 2022 paper that describes a first approach: Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

We explore how generating a chain of thought -- a series of intermediate reasoning steps -- significantly improves the ability of large language models to perform complex reasoning. In particular, we show how such reasoning abilities emerge naturally in sufficiently large language models via a simple method called chain of thought prompting, where a few chain of thought demonstrations are provided as exemplars in prompting.
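The chain of thought prompting described in the abstract above can be sketched as a simple prompt template. This is a minimal illustration and not the paper's code: the `Exemplar` class, the `build_cot_prompt` helper, and the sample questions are all hypothetical, made up here for illustration, and the resulting string would still need to be sent to whatever model you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exemplar:
    """One worked demonstration (hypothetical structure, for illustration)."""
    question: str
    reasoning: str  # the intermediate steps, written out in words
    answer: str


def build_cot_prompt(exemplars, question):
    """Build a few-shot prompt where each demonstration shows its reasoning
    before the final answer, then leave the new question open for the model."""
    blocks = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in exemplars
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Made-up exemplar: the point is the written-out steps, not the arithmetic.
EXEMPLARS = [
    Exemplar(
        question="A baker has 3 trays with 4 muffins each and sells 5. "
                 "How many muffins are left?",
        reasoning="3 trays of 4 muffins is 12 muffins. 12 - 5 = 7.",
        answer="7",
    )
]

prompt = build_cot_prompt(
    EXEMPLARS,
    "A library has 6 shelves with 20 books each and lends out 15. "
    "How many books remain?",
)
print(prompt)
```

The only difference from an ordinary few-shot prompt is that the demonstration answers spell out their intermediate steps, which nudges the model to do the same for the new question.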

Scaling: The State of Play in AI (OneUsefulThing)

The Rapid Adoption of Generative AI

This paper reports results from the first nationally representative U.S. survey of generative AI adoption at work and at home. In August 2024, 39 percent of the U.S. population age 18-64 used generative AI. More than 24 percent of workers used it at least once in the week prior to being surveyed, and nearly one in nine used it every workday.

The Adoption of ChatGPT (University of Chicago)

Half of workers have used ChatGPT, with younger, less experienced, higher-achieving, and especially male workers leading the curve. Workers see substantial productivity potential in using ChatGPT, and informing workers about expert assessments of ChatGPT shifts their beliefs but has limited impacts on their adoption of ChatGPT.

Though each separate experiment is noisy, combined across all three experiments and 4,867 software developers, our analysis reveals a 26.08% increase (SE: 10.3%) in the number of completed tasks among developers using the AI tool. Notably, less experienced developers showed higher adoption rates and greater productivity gains.

This looks at randomized controlled trials, and uses “older” GPT-3.5 technology.
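For a rough sense of how noisy the 26.08% estimate quoted above is, here is a minimal sketch that turns the reported standard error into an approximate 95% interval. It assumes the 10.3% standard error is in percentage points and that a normal approximation is reasonable; neither is confirmed by the quoted text, and `normal_ci` is a hypothetical helper written for this illustration.

```python
def normal_ci(estimate, std_error, z=1.96):
    """Approximate confidence interval under a normal approximation.
    z=1.96 corresponds to roughly 95% coverage."""
    margin = z * std_error
    return estimate - margin, estimate + margin


# Figures from the quoted abstract; treating the SE as percentage points
# is an assumption made for this sketch.
estimate_pct = 26.08
se_pct = 10.3

low, high = normal_ci(estimate_pct, se_pct)
z_score = estimate_pct / se_pct

print(f"Approximate 95% interval: {low:.1f}% to {high:.1f}%")
print(f"Estimate is {z_score:.2f} standard errors above zero")
```

Under those assumptions the interval runs from roughly 6% to 46%, so the gain is distinguishable from zero but its size is far from pinned down.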

Biggest US companies warn of growing AI risk (FT)

Overall, 56 per cent of Fortune 500 companies cited AI as a “risk factor” in their most recent annual reports, according to research by Arize AI, a research platform that tracks public disclosures by large businesses. The figure is a striking jump from just 9 per cent in 2022.