Perspective on Risk - May 16, 2023 (The Next Technology Post)

I need to send this out now, as the pace of change is so rapid that I’ve rewritten it 3x already. It may be a bit of a hot mess. It keeps getting deferred with all the damn banking posts.

The main point is “Holy Sh!t,” these LLMs are incredibly powerful. This, frankly, is way more important than the who-did-what-to-whom-with-what-instrument of the bank failures.

Since I last wrote about technology in the Perspective, things have continued to move very quickly.

Most importantly, OpenAI has released GPT-4.

GPT-4 is far more powerful than the model behind the original ChatGPT. Think Ford Focus vs Ford Mustang.

ChatGPT with GPT-4 has begun to integrate ‘plug-ins’ that expand its capabilities, such as the Wolfram Alpha plug-in discussed previously.

Numerous other general-purpose large language models have been released (Claude, Bard, LLaMA).

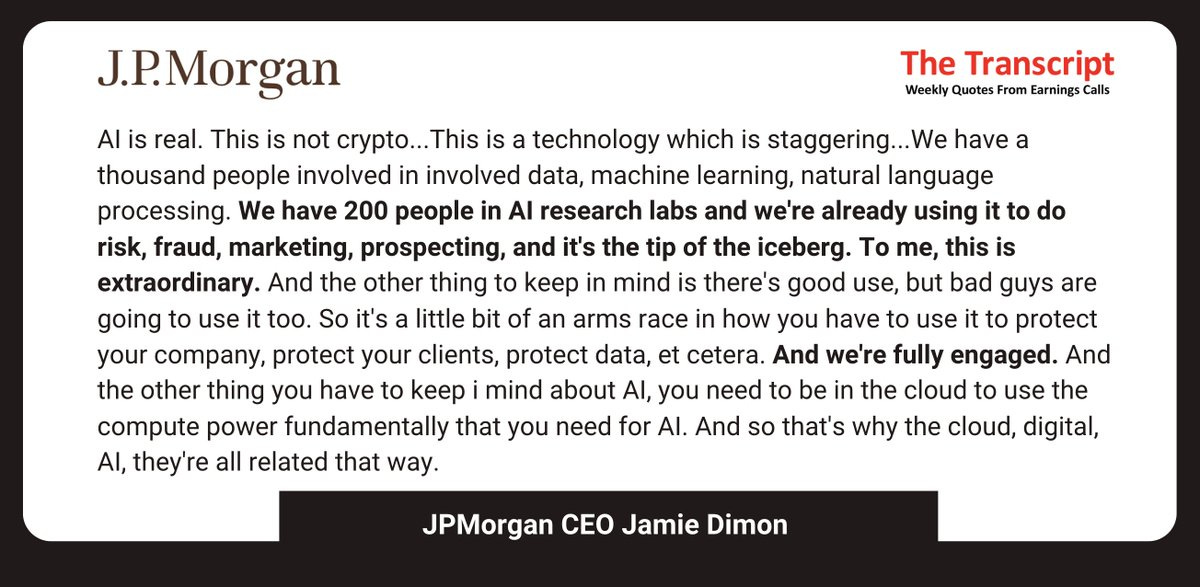

Domain-specific LLMs are being rolled out, including BloombergGPT in finance.

ChatGPT is being tailored and integrated into other applications.

For instance, Elicit is designed to help you perform research by identifying the relevant papers to answer a question (I use this now).

A memo from Google leaked that shows how fast things are moving.

The Google memo makes a number of other points:

[Google has] No Moat And neither does OpenAI; Directly Competing With Open Source Is a Losing Proposition

Large models aren’t more capable in the long run if we can iterate faster on small models

Data quality scales better than data size

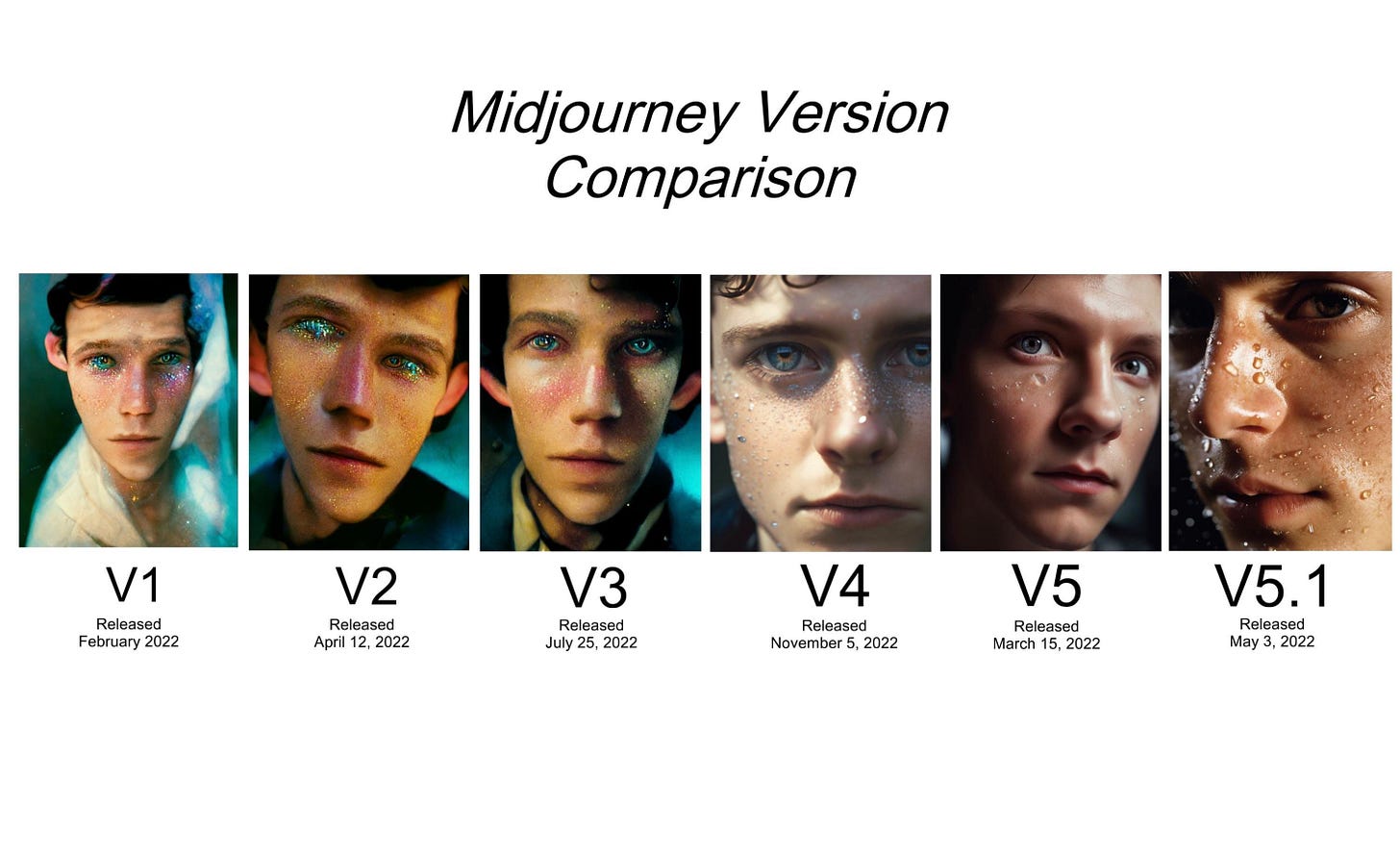

Here Is A Simple Way To See The Improvement

Feb 2022 to May 2023.

How Much Better Will LLMs Get?

This is up for debate. OpenAI’s CEO Says the Age of Giant AI Models Is Already Over

OpenAI says its estimates suggest diminishing returns on scaling up model size. Altman said there are also physical limits to how many data centers the company can build and how quickly it can build them.

That is, where we have spoken in the past about exponential growth of certain technologies, OpenAI suggests that LLM performance scales sub-linearly with model size, meaning progress is not just about adding more parameters.
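To make “sub-linear” concrete, here is a minimal sketch. It assumes model loss follows a power law in parameter count (loss proportional to N raised to the power of minus alpha). The exponent value and the function name are illustrative assumptions, not figures from OpenAI's remarks.

```python
# Illustrative only: assumes loss is proportional to N ** (-alpha).
# ALPHA is a hypothetical exponent chosen for illustration.
ALPHA = 0.076


def relative_loss(param_multiplier: float, alpha: float = ALPHA) -> float:
    """Loss relative to the baseline model after multiplying parameter count."""
    return param_multiplier ** (-alpha)


if __name__ == "__main__":
    for multiplier in (1, 10, 100, 1000):
        share = relative_loss(multiplier)
        print(f"{multiplier:>5}x parameters -> loss at {share:.1%} of baseline")
```

Under this assumption each additional 10x in parameters buys a smaller absolute improvement than the previous 10x, which is the diminishing-returns pattern described above.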

However, in A Survey of Large Language Models the authors note:

Interestingly, when the parameter scale exceeds a certain level, these enlarged language models not only achieve a significant performance improvement but also show some special abilities that are not present in small-scale language models. To discriminate the difference in parameter scale, the research community has coined the term large language models (LLM) for the PLMs of significant size.

For those of a technical bent, the author of First-principles on AI scaling attempts to derive a sophisticated ‘inside view’ examining the elements that would affect the scaling of LLMs, taking both the quantities of data and compute to their possible limits.

He concludes that

There is no apparent barrier to LLMs continuing to improve substantially from where they are now.

While it’s feasible to make datasets bigger, we might hit a barrier trying to make them more than 10x larger than they are now, particularly if data quality turns out to be important.

You can probably scale up compute by a factor of 100 and it would still “only” cost a few hundred million dollars to train a model. But to scale a LLM to maximum performance would cost much more—with current technology, more than the GDP of the entire planet.

How far things get scaled depends on how useful LLMs are.

How To Use LLMs (and specifically GPT-4)

This section will again refer quite a bit to the observations of Wharton Prof. Ethan Mollick. I highly recommend subscribing to his substack.

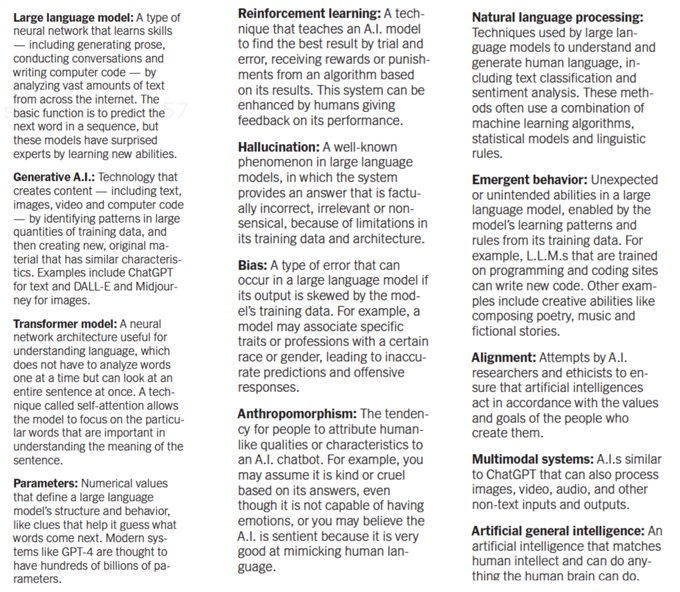

LLM Glossary

GPT-4

You can access GPT-4 in two ways: using Bing in ‘creative mode’ or paying $20 per month for ChatGPT Plus.

GPT-4, especially with some plug-ins, is surprising:

it is capable of learning from failure & trying again until it gets something right, and is “relentless.”

In this example “it has trouble conducting an analysis, so it spontaneously alters approach in response to errors until it fixes the problem

It does this eleven times, and ends up figuring it out. This just takes a few seconds, but would have been frustrating for us”

Using the Code Interpreter plug-in, Prof. Mollick

Uploaded an Excel spreadsheet, without giving any additional context.

With the prompt “can you conduct whatever visualizations and descriptive analysis you think would help me understand the data?” GPT-4:

Figured out what was contained in the spreadsheet (VC investments)

Created a series of relevant bar-charts, histograms and scatter plots to illustrate the data

With some additional prompting (“look for patterns”) it

conducted regressions with diagnostics and performed outlier analysis, and

performed a sensitivity analysis on the variables

And then summarized the analysis in plain English.

ChatGPT can now directly output speech using a plug-in

The key here is that there are now ways to directly overcome the weaknesses inherent in LLMs.

Things GPT-4 can do

Here are just a few things I have seen already:

Be My Eyes is an app that acts as 'eyes' for visually impaired people. It will answer any question about an image and provide instantaneous visual assistance in real-time.

Morgan Stanley has a GPT-4-enabled internal chatbot that searches through an extensive library of PDF documents, making it easier for advisors to find answers to specific questions. The chatbot is trained on Morgan Stanley’s internal content repository.

LinkedIn has introduced a GPT-4-based tool to help subscribers improve their profiles.

Pi is an emotional support chatbot folks are using.

It can easily code up websites. In fact, it can write code in many different computer languages, and also explain how to implement the code.

In fact, here it took a picture of a hand-drawn note and built a website from it.

It can hire humans to do things it can’t!

Here it used TaskRabbit to hire someone to solve a CAPTCHA it couldn’t solve.

The NY Times has an interesting list of small uses of ChatGPT. Some of the things I found most interesting were:

Play Devil’s Advocate - “I use ChatGPT to simulate arguments in favor of keeping the existing tool,” he said. “So that I can anticipate potential counterarguments.”

Cope with ADHD - “With ADHD, getting started and getting an outline together is the hardest part,” Ms. Payne said. “Once that’s done, it’s a lot easier to let the work flow.”

Appeal an insurance denial - Dr. Ryckman uses ChatGPT to write the notes he needs to send insurers when they’ve refused to pay for radiation treatment for one of his cancer patients.

“What used to take me around a half-hour to write now takes one minute,” he said.

Write complex Excel formulas

Get help when English is your second language - “If I can’t think of a particular word, for example, it is super easy to just describe the word, and GPT almost always knows what I mean, even if the description is really bad.”

Make a Spotify playlist - Mr. Soma wanted “on-demand, vibes-based” playlists. All Sum 41 songs, with none of the slow ones, for example. So he hooked up GPT-3 to Spotify.

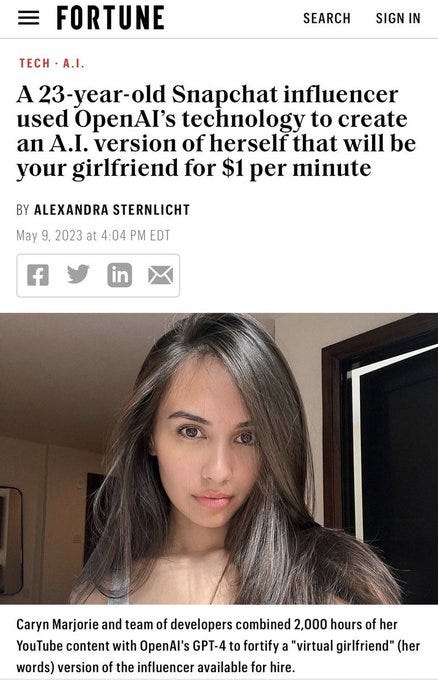

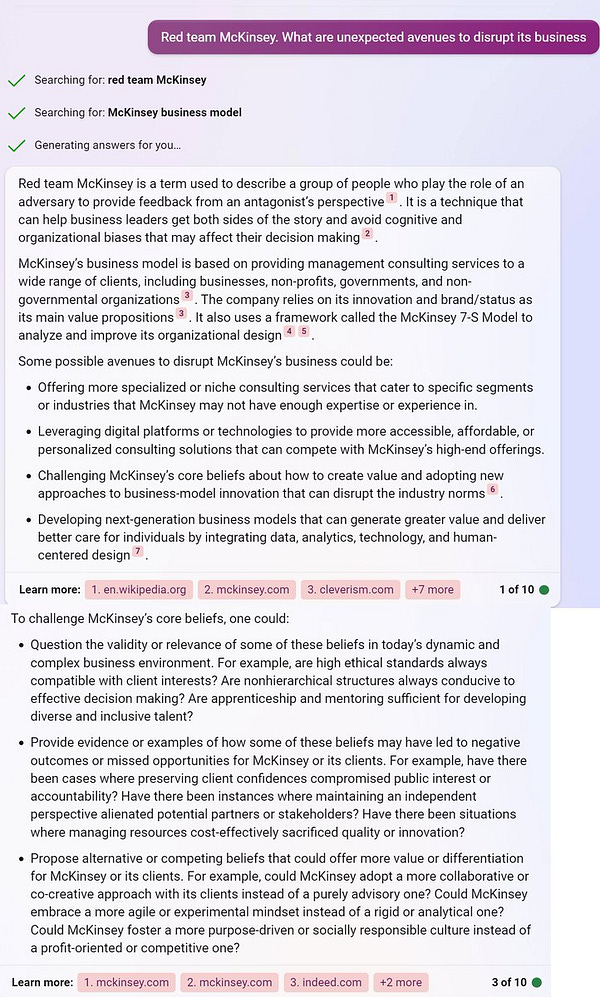

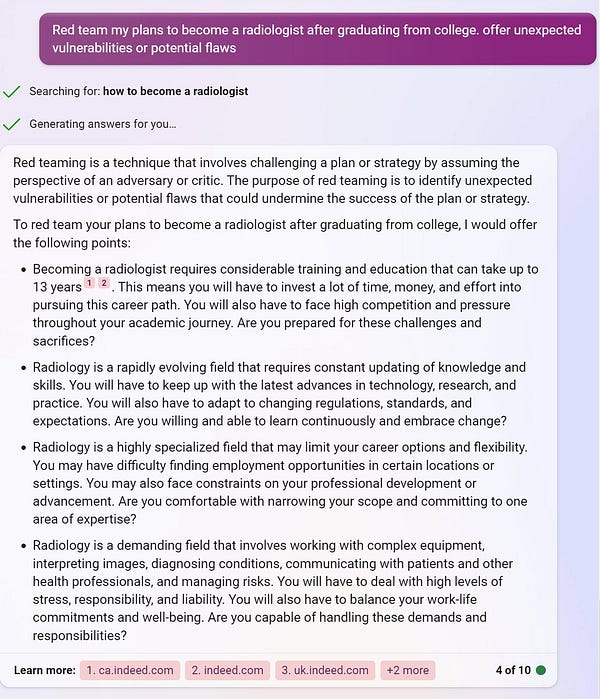

Useful for red-teaming

Using Large Language Models

Ethan Mollick has prepared guides on how to best use LLMs

If you want to use these in your personal work, these are invaluable.

How to use AI to do practical stuff: A new guide

This is about picking the right generative AI model for the task at hand

A guide to prompting AI (for what it is worth)

Give the system context and constraints

For slightly more advanced prompts, think about what you are doing as programming in English prose.

There are some phrases that seem to work universally across LLMs to provide better or different results, again, by changing the context of the answer.

Iterate - don’t expect to write the perfect prompt - have a dialogue with the LLM to get the answers you are looking for - use it as a thinking companion

How to... use AI to unstick yourself

How to unstick your writing

How to take an idea and act on it (unstick action)

How to ask for help

This video gives some great examples of how to write prompts, and of the power and creativity of Bing and GPT-4.

Academic study on how a generative AI implementation improved call-center productivity

Erik Brynjolfsson, together with MIT economists Danielle Li and Lindsey R. Raymond, recently released Generative AI at Work. Erik is notable for having published an early prediction about AI back in 2017, What can machine learning do? Workforce implications. That earlier paper is now quite outdated.

In this earlier paper he wrote:

We are now at the beginning of an even larger and more rapid transformation due to recent advances in machine learning (ML), which is capable of accelerating the pace of automation itself. However, although it is clear that ML is a “general purpose technology,” like the steam engine and electricity, which spawns a plethora of additional innovations and capabilities (2), there is no widely shared agreement on the tasks where ML systems excel, and thus little agreement on the specific expected impacts on the workforce and on the economy more broadly

In the new paper the authors produce one of the first rigorous investigations of generative AI's effect on productivity, specifically in customer support.

We study the staggered introduction of a generative AI-based conversational assistant using data from 5,179 customer support agents. Access to the tool increases productivity, as measured by issues resolved per hour, by 14 percent on average, with the greatest impact on novice and low-skilled workers, and minimal impact on experienced and highly skilled workers. We provide suggestive evidence that the AI model disseminates the potentially tacit knowledge of more able workers and helps newer workers move down the experience curve. In addition, we show that AI assistance improves customer sentiment, reduces requests for managerial intervention, and improves employee retention.

Basically, the paper showed that the AI assistant accelerated the learning curve for less experienced employees.

An AI/LLM Improves Programmer/Developer Performance Significantly

The Impact of AI on Developer Productivity: Evidence from GitHub Copilot

The performance difference between treated and control groups are statistically and practically significant: the treated group completed the task 55.8% faster (95% confidence interval: 21-89%). Developers with less programming experience, older programmers, and those who program more hours per day benefited the most. These heterogeneous effects point towards promise for AI-pair programmers in support of expanding access to careers in software development.

Conditioning on completing the task, the average completion time from the treated group is 71.17 minutes and 160.89 minutes for the control group.
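As a quick check, the two completion times quoted above reproduce the headline “55.8% faster” figure. This is a minimal sketch; the function names are my own, and “faster” is interpreted here as the reduction in completion time relative to the control group.

```python
# Completion times (minutes) as quoted from the Copilot study.
TREATED_MINUTES = 71.17
CONTROL_MINUTES = 160.89


def time_reduction(treated: float, control: float) -> float:
    """Fraction of the control group's time saved by the treated group."""
    return (control - treated) / control


def speedup_factor(treated: float, control: float) -> float:
    """How many times longer the control group took than the treated group."""
    return control / treated


if __name__ == "__main__":
    reduction = time_reduction(TREATED_MINUTES, CONTROL_MINUTES)
    factor = speedup_factor(TREATED_MINUTES, CONTROL_MINUTES)
    print(f"Time saved: {reduction:.1%}")
    print(f"Control took {factor:.2f}x as long as treated")
```

Put differently, the control group took a bit more than twice as long to finish the same task.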

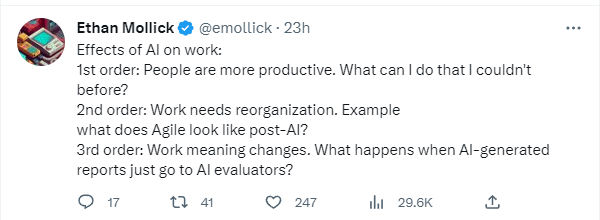

How An Economist Uses LLMs

A User's Guide to GPT and LLMs for Economics Research (YouTube)

Here is An Example of What I’ve Done

For this example, I’ve used Google’s Bard.

This took less than 2 minutes total.

Another Example

I saw a paper in Nature titled Non-Abelian braiding of graph vertices in a superconducting processor. Now, I’ve seen some of those words before. Here is part of the abstract:

In two spatial dimensions, an intriguing possibility exists: braiding of non-Abelian anyons causes rotations in a space of topologically degenerate wavefunctions [4-8]. Hence, it can change the observables of the system without violating the principle of indistinguishability. Despite the well-developed mathematical description of non-Abelian anyons and numerous theoretical proposals [9-22], the experimental observation of their exchange statistics has remained elusive for decades. Controllable many-body quantum states generated on quantum processors offer another path for exploring these fundamental phenomena. Whereas efforts on conventional solid-state platforms typically involve Hamiltonian dynamics of quasiparticles, superconducting quantum processors allow for directly manipulating the many-body wavefunction by means of unitary gates. Building on predictions that stabilizer codes can host projective non-Abelian Ising anyons [9,10], we implement a generalized stabilizer code and unitary protocol [23] to create and braid them. This allows us to experimentally verify the fusion rules of the anyons and braid them to realize their statistics.

Gotta admit, no idea what’s going on. So I asked Bard, and then I asked it to simplify the answer even further until I had some understanding of what the hell they are talking about.

Market Reaction to Release of AI

What are the effects of recent advances in Generative AI on the value of firms?

Using Artificial Minus Human portfolios that are long firms with higher exposures and short firms with lower exposures, we show that higher exposure firms earned excess returns that are 0.4% higher on a daily basis than returns of firms with lower exposures following the release of ChatGPT.

We find that the effect of the release of ChatGPT on firm values was large, driving a difference in firm returns of approximately .4% daily, translating to over 100% on an annualized basis. These differences were realized both within and across industries, and display wide variation which is correlated with firm characteristics such as organizational capital or gross profitability.
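A quick sanity check on the annualization claim. This is a minimal sketch that assumes 252 trading days per year (an assumption; the excerpt does not state the convention used). The function names are illustrative.

```python
# Assumption: 252 trading days per year, a common market convention.
TRADING_DAYS_PER_YEAR = 252
DAILY_EXCESS_RETURN = 0.004  # 0.4% per day, as quoted above


def annualize_simple(daily: float, days: int = TRADING_DAYS_PER_YEAR) -> float:
    """Annualize by simple multiplication (no compounding)."""
    return daily * days


def annualize_compounded(daily: float, days: int = TRADING_DAYS_PER_YEAR) -> float:
    """Annualize by compounding the daily return over the year."""
    return (1 + daily) ** days - 1


if __name__ == "__main__":
    print(f"Simple:     {annualize_simple(DAILY_EXCESS_RETURN):.1%}")
    print(f"Compounded: {annualize_compounded(DAILY_EXCESS_RETURN):.1%}")
```

Even without compounding, 0.4% a day over a full trading year exceeds 100%, consistent with the authors' statement; compounding pushes the figure higher still.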

What Are The Implications

When and How Artificial Intelligence Augments Employee Creativity

From When and How Artificial Intelligence Augments Employee Creativity

Drawing on research on AI-human collaboration, job design, and employee creativity, we examine AI assistance in the form of a sequential division of labor within organizations: in a task, AI handles the initial portion which is well-codified and repetitive, and employees focus on the subsequent portion involving higher-level problem-solving.

First, we provide causal evidence from a field experiment conducted at a telemarketing company. We find that AI assistance in generating sales leads, on average, increases employees’ creativity in answering customers’ questions during subsequent sales persuasion. Enhanced creativity leads to increased sales. However, this effect is much more pronounced for higher-skilled employees.

Next, we conducted a qualitative study using semi-structured interviews with the employees. We found that AI assistance changes job design by intensifying employees’ interactions with more serious customers.

This change enables higher-skilled employees to generate innovative scripts and develop positive emotions at work, which are conducive to creativity.

By contrast, with AI assistance, lower-skilled employees make limited improvements to scripts and experience negative emotions at work.

We conclude that employees can achieve AI-augmented creativity, but this desirable outcome is skill-biased by favoring experts with greater job skills.

Occupational Alignment with LLM Capabilities

In GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models the authors:

investigate the potential implications of large language models (LLMs), such as Generative Pretrained Transformers (GPTs), on the U.S. labor market, focusing on the increased capabilities arising from LLM-powered software compared to LLMs on their own

Our findings reveal that around 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of LLMs, while approximately 19% of workers may see at least 50% of their tasks impacted.

higher-income jobs potentially facing greater exposure to LLM capabilities and LLM-powered software.

with access to an LLM, about 15% of all worker tasks in the US could be completed significantly faster at the same level of quality. When incorporating software and tooling built on top of LLMs, this share increases to between 47 and 56% of all tasks. This finding implies that LLM-powered software will have a substantial effect on scaling the economic impacts of the underlying models.

In How will Language Modelers like ChatGPT Affect Occupations and Industries? Felton et al.

present a methodology to systematically assess the extent to which occupations, industries and geographies are exposed to advances in AI language modeling capabilities.

We find that the top occupations exposed to language modeling include telemarketers and a variety of post-secondary teachers such as English language and literature, foreign language and literature, and history teachers.

We find the top industries exposed to advances in language modeling are legal services and securities, commodities, and investments.

In Occupational Heterogeneity in Exposure to Generative AI the authors reach some similar conclusions to the first paper while building off the Felton framework:

[They] use a recently developed methodology to systematically assess which occupations are most exposed to advances in AI language modeling and image generation capabilities. We then characterize the profile of occupations that are more or less exposed based on characteristics of the occupation, suggesting that highly-educated, highly-paid, white-collar occupations may be most exposed to generative AI, and consider demographic variation in who will be most exposed to advances in generative AI.

The binned scatterplots suggest that there is a strong positive correlation between the two generative AI scores and median salaries, the required level of education, and the presence of creative abilities within an occupation. This is consistent with the idea that highly-skilled and highly-educated white-collar occupations may be most likely to be exposed to the advances in generative AI technologies.

Further, any heterogeneity in which occupations are most likely to be affected by generative AI technologies will also have implications for labor market outcomes across demographic groups, as the distribution of individuals across occupations is not equal across race or gender.

ChatGPT and Other LLMs Are Already Having An Effect

Chegg Q1 2023 Earnings Call Transcript

As we shared with you during our last call, we believe that generative AI and large language models are going to affect society and business, both positively and negatively. At a faster pace than people are used to. Education is already being impacted. And over time, we believe that this will advantage Chegg.

In the first part of the year, we saw no noticeable impact from ChatGPT on our new account growth, and we were meeting expectations on new sign ups. However, since March, we saw a significant spike in student interest in ChatGPT. We now believe it's having an impact on our new customer growth.

The Writers Guild of America's original strike demands were:

Regulate use of artificial intelligence on MBA-covered projects: AI can’t write or rewrite literary material; can’t be used as source material; and MBA-covered material can’t be used to train AI.

Variety reports the position has been modified during negotiation:

WGA Would Allow Artificial Intelligence in Scriptwriting, as Long as Writers Maintain Credit

The Writers Guild of America has proposed allowing artificial intelligence to write scripts, as long as it does not affect writers’ credits or residuals.

The guild had previously indicated that it would propose regulating the use of AI in the writing process, which has recently surfaced as a concern for writers who fear losing out on jobs.

But contrary to some expectations, the guild is not proposing an outright ban on the use of AI technology.

Instead, the proposal would allow a writer to use ChatGPT to help write a script without having to share writing credit or divide residuals.

Bill Gates

Secret Cyborgs: The Present Disruption in Three Papers

Semiconductors

I Saw the Face of God in a Semiconductor Factory

What Are The Implications

Academic

The Diffusion of Disruptive Technologies

We identify novel technologies using textual analysis of patents, job postings, and earnings calls. Our approach enables us to identify and document the diffusion of 29 disruptive technologies across firms and labor markets in the U.S. Five stylized facts emerge from our data. First, the locations where technologies are developed that later disrupt businesses are geographically highly concentrated, even more so than overall patenting. Second, as the technologies mature and the number of new jobs related to them grows, they gradually spread geographically. While initial hiring is concentrated in high-skilled jobs, over time the mean skill level in new positions associated with the technologies declines, broadening the types of jobs that adopt a given technology. At the same time, the geographic diffusion of low-skilled positions is significantly faster than higher-skilled ones, so that the locations where initial discoveries were made retain their leading positions among high-paying positions for decades. Finally, these pioneer locations are more likely to arise in areas with universities and high skilled labor pools.

Fear of LLMs

Writers Strike

Maybe Just Hire A Parrot

See Kahneman, Thinking, Fast and Slow