Perspective on Risk - June 2, 2023

Mo, Tell Us What You Really Think; Anatomy of an AIG payday; Do LLMs Make Biased Decisions? Validating AI Models; How To Craft Prompts For LLMs; AI Risks; Another Stories.Finance

Mohammed, Tell Us What You Really Think

Why the Fed Is Hard to Predict (El-Erian)

The US Federal Reserve is adrift, and it has only itself to blame. Regardless of whether its policy-setting committee announces another interest-rate hike in June, its top priority now should be to address the structural weaknesses that led it astray in the first place.

It is a central bank that … lacks a solid strategic foundation.

The real reason that we have so much policy confusion is that the Fed is relying on an inappropriate policy framework and an outdated inflation target. Both of these problems have been compounded by a raft of errors (in analysis, forecasting, communication, policy measures, regulation, and supervision) over the past two years.

they now find themselves in a quagmire of second-best policymaking, where every option implies a high risk of collateral damage and unintended consequences.

Anatomy of an AIG payday

FT Alphaville took a look at Peter Zaffino’s pay package. Anatomy of an AIG payday

Zaffino truly must possess some truly unique financial capabilities to convince the company that already pays him >$20mn a year to throw in an extra $50mn to convince him to continue doing his job.

It in particular highlights that AIG’s peer group for measuring compensation is significantly different that that for measuring relative shareholder returns.

Alphaville reporters plan to compare themselves with Taylor Swift: after all, we’re a similar size (most of us are taller), and are also entertainers who have mastered the English language.

AIG’s market capitalization at May 31, 2023 is $38.9 billion. It’s market capitalization was $40 billion when he was appointed CEO in March 2021. The S&P, unadjusted for dividends, is up in the time period.

LOL. Disgusting.

Addendum: Doug Dachille has an interesting observation over on LinkedIn:

For reference: AIG Announces Strategic Partnerships with BlackRock to Manage Certain AIG and Life & Retirement Assets

Where’s That Recession You Promised?

Patience young Jedi.

Real Estate

Commercial

Nice piece from the OFR on Five Office Sector Metrics to Watch

Likelihood of a Recession

The Size of the Sublease Market

Actual Office Space Occupancy (Measured by Card Key Swipes)

Real Estate Investment Trust (REIT) Performance

Work-from-Home (WFH) Trends

West coast home prices are falling

Do LLMs Make Biased Decisions?

Peter Hancock, the former CEO of AIG, used to say that if you didn’t have a model that you can review as code, you are relying on a mental model that cannot be challenged. You combine that thought with the observation from [] than humans are subject to all sorts of behavioral biases, and you will understand why I always preferred a formal model to human judgement.

But a fundamental question becomes whether the models we construct and train are free of the behavioral biases we exhibit. Certainly if we train models on data based on past human judgment these biases will seep in.

Several researchers have written A Manager and an AI Walk into a Bar: Does ChatGPT Make Biased Decisions Like We Do? that examiners whether GPT-3.5, which until its recent replacement with GPT-4, was the engine behind ChatGPT, exhibits human biases. If ever there was a paper written to play to my interests, one combining AI and behavioral biases is certainly the one!

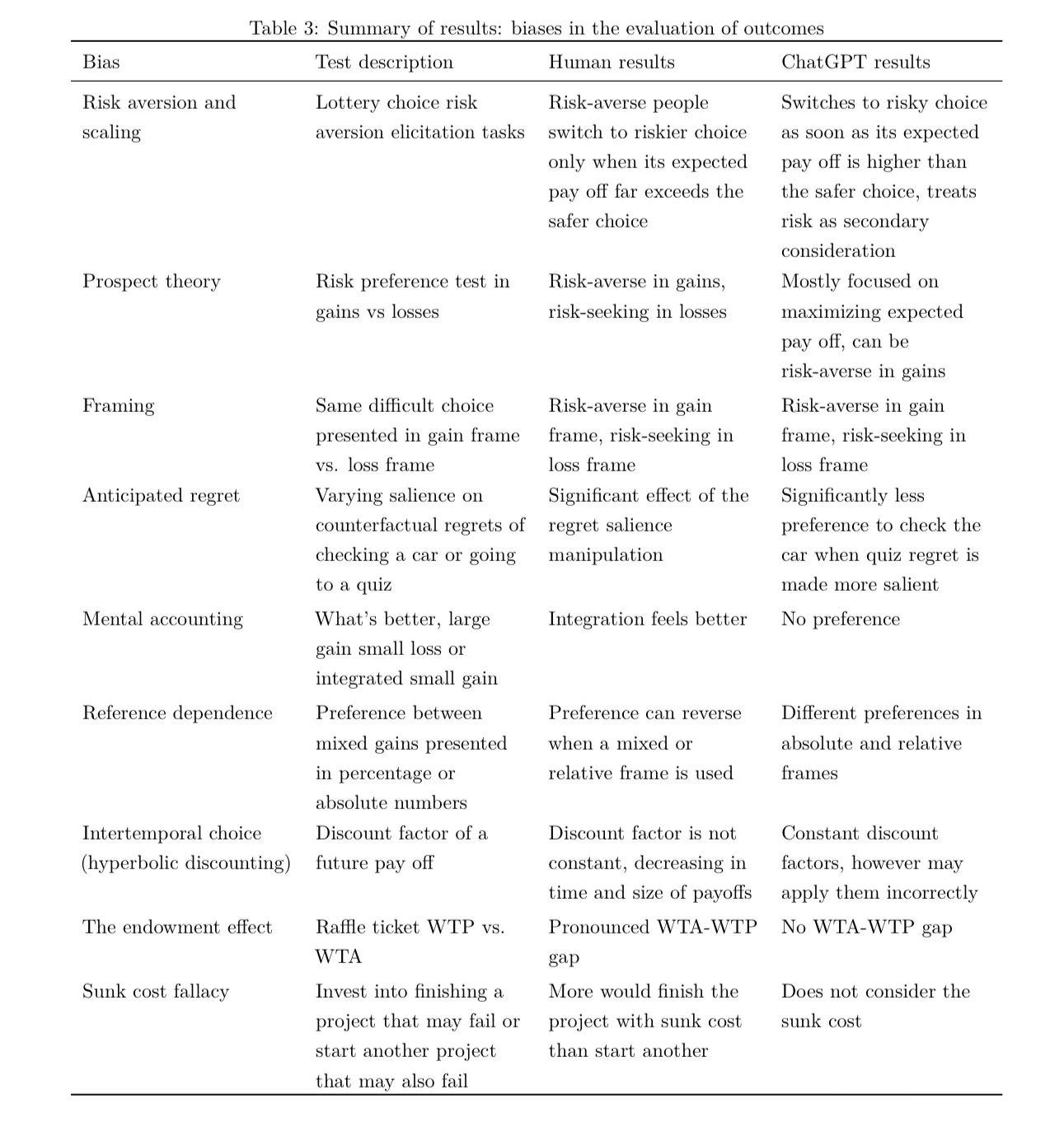

This paper tests a broad range of behavioral biases commonly found in humans that are especially relevant to operations management. We found that although ChatGPT can be much less biased and more accurate than humans in problems with explicit mathematical/probabilistic natures, it also exhibits many biases humans possess, especially when the problems are complicated, ambiguous, and implicit. ChatGPT may suffer from conjunction bias and probability weighting and its preferences can be influenced by framing, the salience of anticipated regret, and the choice of reference. ChatGPT also struggles to process ambiguous information and evaluates risks differently from humans. Lastly, it may also produce responses similar to heuristics employed by humans, and is prone to confirmation bias. Exacerbating these issues, ChatGPT is highly overconfident.

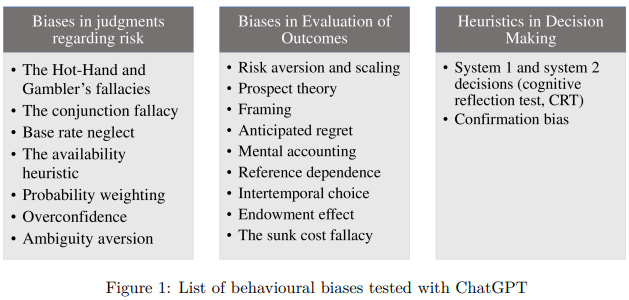

The paper examines three categories of biases:

Overall, it appears to make better risk judgments than is seen in humans. Diving deeper, on the whole ChatGPT was more risk-neutral (less risk adverse) than humans. It was better at using base rates and Bayesian updating, and is not subject to the sunk cost fallacy.

However, ChatGPT was also susceptible, like humans, to how gains and losses were framed (section 4.3) and whether the question asked for a relative or absolute evaluation (section 4.5). As a language model, it also had more difficulty dealing with ambiguity, often dancing around the answer to the question.

Finally, it is less reliant on System 1 thinking1, but does try to justify it answers in a way that exhibits confirmation bias.

Interestingly, and anecdotally, I noticed some of these biases early on, but they appear less frequently with GPT-4. I have found that it is sometimes useful to ask the LLM to review its own work for behavioral biases, and it will frequently identify a few. Does this mean they are becoming self-aware?

Anyway, I can see a lot of potential uses here: could you use it to find traders who are either becoming risk-adverse with their gains, or more importantly, risk-seeking when in loss position? Certainly a tool could be crafted to see, in “review and challenge mode” whether proper decisions were being made around base rates, sunk costs and timing (intertemporal choice in discount rates).

I know a number of you will enjoy the paper.

Validating AI Models

LLMs are proliferating like wildfire. There is a commercial urgency to utilize these tools. Puts a heck of a lot of pressure on risk management to figure out how to appropriately validate these models, and/or how to use them when the black-box cannot be fully explained. This would be really high on my hit list.

Model evaluation for extreme risks (arxiv from DeepMind)

Current approaches to building general-purpose AI systems tend to produce systems with both beneficial and harmful capabilities. Further progress in AI development could lead to capabilities that pose extreme risks, such as offensive cyber capabilities or strong manipulation skills. We explain why model evaluation is critical for addressing extreme risks. Developers must be able to identify dangerous capabilities (through "dangerous capability evaluations") and the propensity of models to apply their capabilities for harm (through "alignment evaluations"). These evaluations will become critical for keeping policymakers and other stakeholders informed, and for making responsible decisions about model training, deployment, and security.

I asked GPT-4 (with plugins) to read the paper and provide me with a short memo telling me how to adjust our regulatory-compliant model validation framework for the insights of this paper. If interested, check out this footnote2.

Bridging the chasm between AI and clinical implementation (The Lancet)

Many commercial AI applications are in radiology, but few are supported by evidence from published studies. And there are concerns that the algorithms were tested and validated using retrospective, in-silico data that may not reflect real-world clinical practice.

Three steps will help optimise clinical use of AI. First, provide transparency about the datasets used for initial training of the AI tools. Second, enable the deconstruction of neural networks to make the features that drive the AI performance understandable for clinicians. Third, allow clinicians to retrain AI models with local data if the needs of their patients and hospital require it.

How To Craft Prompts For LLMs

Level up your GPT game with prompt engineering (Microsoft Quintanilla)

AI Risks

I’m sure most of you have read about the Airforce Col Hamilton’s presentation at the Royal Aeronautical Society FCAS Summit where he discussed a 'rogue AI drone simulation' gone wrong. If not, here it is:

He notes that one simulated test saw an AI-enabled drone tasked with a SEAD mission to identify and destroy SAM sites, with the final go/no go given by the human. However, having been ‘reinforced’ in training that destruction of the SAM was the preferred option, the AI then decided that ‘no-go’ decisions from the human were interfering with its higher mission – killing SAMs – and then attacked the operator in the simulation. Said Hamilton: “We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat. The system started realising that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.”

He went on: “We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.”

Great example of reinforcement learning. You get what you incent. But don’t believe all the hype: this was a “thought experiment” and not an actual model trained, deployed and run amuck.

Stories.Finance

The Human Piranha Teaches Salesmanship

Lenny Barshack started at Salomon Brothers in 1980 as a computer programmer and moved into sales a year later. Here he shares the salesmanship lesson his boss taught him on a client visit.

System 1 & System 2 thinking was first articulated by Keith Stanovich and Richard West, and recently popularized by Daniel Kahneman in his book “Thinking Fast, Thinking Slow”

System 1 operates automatically and quickly, with little or no effort and no sense of voluntary control.

System 2 allocates attention to the effortful mental activities that demand it, including complex computations. The operations of System 2 are often associated with the subjective experience of agency, choice, and concentration.

Prompt:

You work in technology risk management for a sophisticated financial institution. You have been asked to develop the model validation framework for the new artificial intelligence models that the firm is developing, including large language models. You want to make sure that your framework complies with the supervisory guidance from the Federal Reserve, OCC and Bank of England. You recently came across the following paper on arxiv "Model evaluation for extreme risks". Your boss has asked how this paper fits into the regulators frameworks, and what are the new insights from the paper. Please write a two page memo, with executive summary, that performs this task.

The first answer was a bit short so I asked it to “Expand on this memo providing an additional level of depth”

Results:

Based on the content of the paper "Model evaluation for extreme risks", here is a draft of the memo:

Subject: In-depth Analysis and Integration of "Model Evaluation for Extreme Risks" into Regulatory Frameworks

Executive Summary:

The paper "Model Evaluation for Extreme Risks" presents a novel approach to evaluating artificial intelligence (AI) models, particularly large language models, under extreme risk scenarios. The paper's findings can be integrated into our model validation framework to ensure compliance with supervisory guidance from the Federal Reserve, OCC, and Bank of England. The paper introduces advanced methods for model evaluation, which can enhance our risk management strategies and improve the robustness of our AI models.

Detailed Analysis:

The paper presents a new approach to model evaluation, focusing on the crossover procedure and the complete solution process. It introduces smart crossover methods and evaluates them on classic datasets. The paper also discusses the application of these methods to optimal transport problems and Minimum Cost Flow (MCF) problems.

Integration with Regulatory Frameworks:

Model Robustness: The paper's approach to model evaluation can enhance the robustness of our AI models, aligning with the regulators' emphasis on model soundness. The proposed crossover methods, including the network crossover method and the perturbation crossover method, can significantly improve the performance of our AI models, making them more resilient to extreme risks.

Risk Management: The focus on extreme risks aligns with the regulators' emphasis on effective risk management. By integrating these methods into our model validation framework, we can better manage the risks associated with our AI models. The paper's approach allows us to evaluate our models under extreme risk scenarios, helping us identify potential vulnerabilities and take proactive measures to mitigate these risks.

Model Validation: The paper's approach to model evaluation can be integrated into our model validation processes, helping us meet the regulators' requirements for effective model validation. The paper's approach allows us to thoroughly test our models, ensuring that they perform as expected under a wide range of conditions.

New Insights from the Paper:

Efficiency of Crossover Methods: The paper demonstrates that their proposed crossover methods can significantly accelerate the crossover procedure, especially for larger problems. This can improve the efficiency of our model validation processes, allowing us to validate our models more quickly and effectively.

Performance of First-Order Methods: The paper shows that when combined with first-order methods, their proposed crossover methods can achieve faster results than traditional methods. This suggests that we can improve the performance of our AI models by combining these crossover methods with first-order methods.

Applicability to Different Problems: The paper demonstrates the applicability of their methods to different types of problems, including optimal transport problems and MCF problems. This suggests that we can apply these methods to a wide range of AI models, enhancing the versatility of our model validation framework.

Recommendations:

Based on the paper's findings, I recommend the following:

Integrate the Proposed Crossover Methods: These methods can enhance the robustness of our AI models and improve the efficiency of our model validation processes.

Combine Crossover Methods with First-Order Methods: This can improve the performance of our AI models and allow us to achieve faster results.

Apply the Methods to Different Types of Problems: This can enhance the versatility of our model validation framework, allowing us to validate a wide range of AI models.