Perspective on Risk - Apr. 23, 2025 (Yet Another AI Post)

Artificial General Intelligence; I’ve Seen The Future; Recursive Self-Improvement & Artificial Super Intelligence; Immanentizing The Eschaton; LLMs At Work; Risk-Managing AGI; Robots; Space-Time

At/Approaching Artificial General Intelligence

On some tests, Large Language Models now match or exceed the performance of most people.

Fluid IQ: Raven’s Progressive Matrices (RPM) is often used as the "purest" test of general intelligence — pattern recognition, logic, abstraction — and does not rely on language. The average adult human score (scaled to IQ) is ~100 by design; high-performing college students typically fall in the 115–125 range, and exceptional thinkers, engineers, or PhDs might score 130–145. Claude 3 Opus has shown RPM-like task performance equivalent to an IQ of ~135–140, with chain-of-thought prompting. GPT-4 comes in just below that — roughly ~130–135 IQ-equivalent in similar tasks.1

Big-Bench Hard (BBH) is a composite of extremely hard problems in logic, math, social reasoning, and linguistics — crafted to stump earlier LLMs and challenge humans. Smart undergrads / early PhDs tend to perform at ~60–70% accuracy on many BBH tasks. Claude 3 Opus and GPT-4 now score in the ~80–85% range on many BBH tasks.2

Law: GPT-4 (OpenAI) and Claude 3 Opus (Anthropic) scored in the top 10% of test-takers on the Uniform Bar Exam. Gemini 1.5 Pro (Google) also passes, but is slightly behind GPT-4 and Claude.3

Medicine: GPT-4 passes all three steps of the Medical Licensing Exams (USMLE). It shows particular strength in diagnostic reasoning and pharmacology. Claude 3 Opus also passes all steps with comparable or better contextual judgment, especially on complex case presentations. Gemini 1.5 Pro again also passes, but is slightly behind.4

Math: GPT-4 and Claude 3 Opus perform at approximately PhD admissions level in undergraduate and early graduate math — but struggle with proofs and original derivations.5

Economics: GPT-4 and Claude 3 can handle graduate-level micro, macro, and econometrics, though both can misapply edge-case assumptions or confuse equilibrium concepts when unstated.6

Sciences: Massive Multitask Language Understanding (MMLU). This test includes 57 subjects, like physics, medicine, law, math, and history — across high school to professional level.

Physics: College graduates score at 65–70%, and human experts at 85–90% in their specialty. The three aforementioned LLMs score between 78–85%.7

Chemistry: Chemistry undergrads score 70–80%; Chemistry PhDs score 85–90%. Claude 3 / GPT-4 score 85–86%.8

Biology: Biology undergrads score 75–85%; Biology PhDs score 90–95%. Claude 3 / GPT-4 score ~88–90%.9

Psychology: Psychology majors score 75–85%; Psychology PhDs score 85–95%. Claude 3 / GPT-4 score 89–90%.10

Theory of Mind Tasks: Claude 3 and GPT-4 can pass basic Theory of Mind (ToM) tasks, but struggle with 2nd-order false beliefs (e.g., “She thinks that he thinks…”). Some studies liken LLM ToM capabilities to those of ~7–10-year-old humans.11

Moral and Ethical Judgment (MoralScenarios, ETHICS dataset): Claude 3 Opus is often judged as more consistent than humans.12

And the price of said intelligence is rapidly dropping.

I’ve Seen The Future

Who says Federal Reserve economists don’t have a sense of humor! In Hedging The AI Singularity (GitHub), Andrew Chen and his LLM coauthors (o1, Sonnet, and ChatGPT Deep Research) wrote a paper that “demonstrates how AI stocks can command premium valuations when they serve as hedges against scenarios where AI breakthroughs reduce household welfare while increasing AI assets’ share of the economy.”

Andrew Chen didn’t just write a paper about using AI assets to hedge against human obsolescence. He used AI to write the paper itself.

This isn’t just clever or ironic. It’s a recursive, meta-level act of hedging:

Writing a paper with LLMs about hedging the AI singularity is itself a hedge against the AI singularity.

The actual model—Barro-Rietz-style rare disaster with AI stocks—is clean and well-crafted. But the true originality of the paper lies in its form:

A paper about hedging the AI singularity

Written by the very agents that would cause it

Co-authored by the human trying to hedge against them

Published as an act of hedging

It’s not just academic work. It’s a philosophical performance, a portfolio allocation, and a survival instinct—all in one.

My only complaint is that this is not on the Fed’s own website!

BTW, Claude got the meta-significance of the paper quicker than 01-Sonnet

Recursive Self-Improvement

We believe as an industry that in the next one year the vast majority of programmers will be replaced by AI programmers. We also believe that within one year you will have graduate level mathematicians that are at the tippy top of graduate math programs. …

What happens in two years? Well, I've just told you about reasoning, and I've told you about programming, and I've told you about math. Programming plus math are the basis of sort of our whole digital world. So the evidence and the claims from the research groups in OpenAI and Anthropic and so forth is that they're now somewhere around 10 or 20% of the code that they're developing in their research programs is being generated by the computer. That's called recursive self-improvement …

What happens when this thing starts to scale? Well a lot - one way to say this is that within 3 to 5 years we'll have what is called general intelligence AGI …

It gets much more interesting after that because remember the computers are now doing self-improvement - they're learning how to plan and they don't have to listen to us anymore. We call that … ASI, artificial super intelligence, and this is the theory that there will be computers that are smarter than the sum of humans. The San Francisco conventional consensus is this occurs within six years just based on scaling this path. — Eric Schmidt

Immanentizing The Eschaton

LLMs At Work

Teams + AI

This is important for the managers out there.

The Cybernetic Teammate: A Field Experiment on Generative AI Reshaping Teamwork and Expertise

Related Substack post: The Cybernetic Teammate

From the paper:

We examine how artificial intelligence transforms the core pillars of collaboration— performance, expertise sharing, and social engagement—through a pre-registered field experiment with 776 professionals at Procter & Gamble…

From Substack:

When working without AI, teams outperformed individuals by a significant amount, 0.24 standard deviations (providing a sigh of relief for every teacher and manager who has pushed the value of teamwork).

Individuals working with AI performed just as well as teams without AI, showing a 0.37 standard deviation improvement over the baseline. This suggests that AI effectively replicated the performance benefits of having a human teammate – one person with AI could match what previously required two-person collaboration.

Teams with AI performed best overall with a 0.39 standard deviation improvement, though the difference between individuals with AI and teams with AI wasn't statistically significant.

… when looking at truly exceptional solutions, those ranking in the top 10% of quality. Teams using AI were significantly more likely to produce these top-tier solutions, suggesting that there is value in having human teams working on a problem that goes beyond the value of working with AI alone.

Without AI, we saw clear professional silos in how people approached problems. … When these specialists worked together in teams without AI, they produced more balanced solutions through their cross-functional collaboration (teamwork wins again!). But … when paired with AI, both R&D and Commercial professionals, in teams or when working alone, produced balanced solutions that integrated both technical and commercial perspectives.

Good Leaders Can Lead AI Agents Too

Measuring Human Leadership Skills with AI Agents (NBER)

We show that leadership skill with artificially intelligent (AI) agents predicts leadership skill with human groups. … Successful leaders of both humans and AI agents ask more questions and engage in more conversational turn-taking; they score higher on measures of social intelligence, fluid intelligence, and decision-making skill, but do not differ in gender, age, ethnicity or education. Our findings indicate that AI agents can be effective proxies for human participants in social experiments, which greatly simplifies the measurement of leadership and teamwork skills.

Despite LLMs, People Still Like To Go To Meetings

Shifting Work Patterns with Generative AI (arXiv)

We present evidence on how generative AI changes the work patterns of knowledge workers using data from a 6-month-long, cross-industry, randomized field experiment. … We find that access to the AI tool during the first year of its release primarily impacted behaviors that could be changed independently and not behaviors that required coordination to change: workers who used the tool spent 3 fewer hours, or 25% less time on email each week (intent to treat estimate is 1.4 hours) and seemed to complete documents moderately faster, but did not significantly change time spent in meetings.
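A quick back-of-the-envelope check on those figures, as a sketch only. It assumes that workers who did not use the tool saw no change in email time, so the intent-to-treat estimate is just the user effect diluted by the usage rate; the paper itself may define things differently. The variable names are illustrative.

```python
# Figures quoted in the abstract above.
hours_saved_by_users = 3.0   # weekly email hours saved by workers who used the tool
share_of_email_time = 0.25   # the same saving expressed as a share of weekly email time
itt_hours = 1.4              # intent-to-treat estimate, averaged over everyone offered the tool

# If 3 hours is 25% of email time, the implied baseline is 3 / 0.25 hours per week.
implied_baseline_hours = hours_saved_by_users / share_of_email_time

# Assumption: non-users are unaffected, so ITT = usage share * effect on users.
implied_usage_share = itt_hours / hours_saved_by_users

print(f"Implied baseline email time: {implied_baseline_hours:.1f} hours/week")
print(f"Implied share of workers using the tool: {implied_usage_share:.0%}")
```

Under that assumption, the quoted numbers imply roughly 12 hours a week on email before the tool, and a bit under half of the workers offered the tool actually using it.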

First They Came For The Programmers, But I was Not A Programmer

link (SFW)

Risk Managing Artificial General Intelligence

Regulators Are Concerned

In Safe Havens May Not Be, Levine writes about “bad AI.”

… if you are training your AI model by rewarding it for finding stocks that go up, and if you don’t really understand how it predicts stocks that will go up, eventually it might get it into its little AI head that it can make the stocks go up by doing some sort of subtle market manipulation, and that this is what you want. And then maybe it will do it.

BOE Warns Deviant AI on Trading Floors Risks Triggering a Crash (Bloomberg)

The central bank’s Financial Policy Committee warned that the technology could destabilize markets or act in other adverse ways in a new report on AI published Wednesday. It added that AI was making such rapid headway among hedge funds and other trading firms that humans may soon not understand what the models are doing.

“Models with sufficient autonomy could act in ways that are detrimental to the overall stability or integrity of markets, for example by ignoring regulatory or legal guardrails such as market abuse regulations,” according to the report. “Human managers would also need to manage such regulatory risks.”

Financial Stability in Focus: Artificial intelligence in the financial system (Bank of England)

Greater use of AI in banks’ and insurers’ core financial decision-making (bringing potential risks to systemic institutions).

Greater use of AI in financial markets (bringing potential risks to systemic markets).

Operational risks in relation to AI service providers (bringing potential impacts on the operational delivery of vital services).

Changing external cyber threat environment.

AI, Fintechs, and Banks (Fed. Gov. Barr)

I believe that the bank–fintech relationship has the potential to accelerate adoption of Gen AI in financial services. This may come in the form of direct competition, with fintechs taking market share from banks for certain products, or banks crowding out fintechs by introducing better technology into their existing or new product lines. … Alternatively, fintechs and banks may enter into a symbiotic relationship, forming collaborative partnerships where fintechs and banks merge their strengths.

To the extent banks are using Gen AI or offering Gen AI products and services, they have the responsibility to manage their risk, and should use their relationships to incentivize good risk management practices for fintechs.8 This means choosing fintech partners that provide transparency and clarity regarding the development of AI tools and have demonstrated appropriate control in deployment.

With respect to Gen AI, it is important for fintechs and banks to tackle questions like who owns the customer data, and potential conflicts that may arise if a bank's customer data are fed back into a fintech's Gen AI model.

Fintech developers should, for example, prioritize identifying biases in training data sets and monitoring outputs in order to prevent those biases from amplifying inequalities or mispricing risks.

[Regulators] should review and update existing standards on model risk management

Related: The Most Damaging “Guidance” in Banking (BPI)

As the incoming Administration looks for ways to rationalize regulation … one … candidate … [is] the federal banking agencies’ Model Risk Management Guidance. It is a set of check-the-box instructions on how banks should validate and document the models they use across all aspects of their operations. It has disrupted bank operations; created a massive compliance bureaucracy; hampered banks’ efforts to make effective use of AI; and impeded banks from innovating in everything from lending to anti-money laundering monitoring to cybersecurity. Its application has also varied greatly from examiner to examiner. Moreover, that manual is now 14 years old, and has never been updated to reflect the revolution in computer analysis that has occurred since its adoption.

The Model Guidance should be withdrawn and re-proposed for public comment. … And it needs to allow for banks to pilot and test new models without fear of sanction.

Here Is A Good Guide To Help You

Taking a responsible path to AGI (Google DeepMind)

AGI Safety Course (YouTube)

Robots

Chinese robots ran against humans in the world’s first humanoid half-marathon. They lost by a mile (CNN)

More than 20 two-legged robots competed in the world’s first humanoid half-marathon in China on Saturday, and – though technologically impressive – they were far from outrunning their human masters over the long distance.

The robots were pitted against 12,000 human contestants, running side by side with them in a fenced-off lane.

After setting off from a country park, participating robots had to overcome slight slopes and a winding 21-kilometer (13-mile) circuit before they could reach the finish line, according to state-run outlet Beijing Daily.

The first robot across the finish line, Tiangong Ultra – created by the Beijing Humanoid Robot Innovation Center – finished the route in two hours and 40 minutes. That’s nearly two hours short of the human world record of 56:42, held by Ugandan runner Jacob Kiplimo. The winner of the men’s race on Saturday finished in 1 hour and 2 minutes.

This seems like a good benchmark to follow: how long until an independent robot can win a half-marathon?

An Interesting Space-Time Digression

(written with the assistance of ChatGPT)

I kinda love thinking and reading about space-time.

I think most people remember from their primary school days that the universe is expanding (Hubble constant; first derivative of space wrt time).

Some people who pay attention may have heard that, surprisingly, the rate of the expansion of the universe is accelerating! (Deceleration; second derivative).

Most recently, researchers found evidence that this acceleration itself (Jerk; 3rd derivative) may be slowing!!

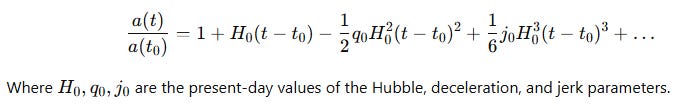

In theory, then, we could express this as a Taylor series expansion. It would take this form:
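One standard way to write it, assuming the usual cosmographic notation rather than any particular source's symbols, expands the scale factor $a(t)$ around the present time $t_0$:

$$
a(t) \approx a(t_0)\left[\,1 + H_0\,(t - t_0) - \frac{q_0}{2}\,H_0^2\,(t - t_0)^2 + \frac{j_0}{6}\,H_0^3\,(t - t_0)^3 + \dots\right]
$$

Here $H_0 = \dot{a}/a$ is the Hubble constant (first derivative), $q_0 = -\ddot{a}/(a H^2)$ is the deceleration parameter (second derivative), and $j_0 = \dddot{a}/(a H^3)$ is the jerk (third derivative), all evaluated at $t_0$. A negative $q_0$ means the expansion is accelerating.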

We can add in our best estimates of each of the parameters
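As a rough numerical sketch of that step, the snippet below evaluates the third-order expansion a(t)/a(t0) = 1 + x - (q0/2) x^2 + (j0/6) x^3, with x = H0 (t - t0). The parameter values are round, illustrative assumptions (H0 = 70 km/s/Mpc, q0 = -0.55, j0 = 1, roughly what a flat ΛCDM universe with 30% matter gives), not the estimates referred to above, and the function names are hypothetical.

```python
KM_PER_MPC = 3.0857e19        # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in one billion (Julian) years


def hubble_per_gyr(h0_km_s_mpc):
    """Convert H0 from km/s/Mpc to units of 1/Gyr."""
    return h0_km_s_mpc / KM_PER_MPC * SECONDS_PER_GYR


def scale_factor_ratio(dt_gyr, h0_km_s_mpc=70.0, q0=-0.55, j0=1.0):
    """Third-order Taylor estimate of a(t0 + dt) / a(t0).

    Default parameter values are illustrative assumptions only.
    """
    x = hubble_per_gyr(h0_km_s_mpc) * dt_gyr
    return 1.0 + x - 0.5 * q0 * x**2 + j0 * x**3 / 6.0


if __name__ == "__main__":
    for dt in (-1.0, 0.0, 1.0):
        print(f"dt = {dt:+.1f} Gyr -> a/a0 = {scale_factor_ratio(dt):.4f}")
```

With these assumed values, 1/H0 is about 14 billion years, so the expansion is only trustworthy for time offsets that are small compared with that.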

Cool, right?

But there’s a problem, raised by a recent preprint, Dynamical Dark Energy, Dual Spacetime, and DESI, which purports to use string theory to explain the existence of ‘dark energy,’ with results that align pretty closely with the above parameters.

In their model (according to ChatGPT)

In this string theory model, space and time are more like a quilt made of overlapping patches, and you can’t cleanly say where things are or how they’re changing using the usual tools—because the fabric itself has a kind of "quantum fuzziness."

This leads, according to the article I link to below, to the problem.

By replacing the Standard Model's description of particles with the framework from string theory, the researchers found that space-time itself is inherently quantum and noncommutative, meaning the order in which coordinates appear in equations matters.

The key word there is ‘noncommutative.’ If the system is noncommutative, the idea that “adding the next term makes things better” breaks down.

In string theory, perturbative expansions are often asymptotic rather than convergent. That means the first few terms may approximate the behavior of the system, but the series diverges eventually, and higher-order terms may oscillate or worsen the approximation.
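A toy illustration of what "asymptotic rather than convergent" means, using a classic textbook example rather than anything from string theory or from the preprint. The function f(x) = ∫₀^∞ e^(-t) / (1 + x t) dt has the formal series Σ (-1)^n n! x^n, which diverges for every x > 0 yet approximates f well if you stop at the right term. The function names are hypothetical, and the "exact" value is a simple numerical integral.

```python
import math


def stieltjes_integral(x, upper=60.0, steps=60_000):
    """Simpson's rule for the integral of exp(-t) / (1 + x*t) from 0 to `upper`.

    The tail beyond `upper` is negligible because of the exp(-t) factor.
    `steps` must be even.
    """
    h = upper / steps

    def g(t):
        return math.exp(-t) / (1.0 + x * t)

    total = g(0.0) + g(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * g(i * h)
    return total * h / 3.0


def partial_sum(x, n_terms):
    """Sum of the first n_terms terms of the series sum_n (-1)^n n! x^n."""
    return sum((-1) ** n * math.factorial(n) * x**n for n in range(n_terms))


x = 0.1
exact = stieltjes_integral(x)
errors = {n: abs(partial_sum(x, n) - exact) for n in range(1, 31)}
best_n = min(errors, key=errors.get)

if __name__ == "__main__":
    for n in (1, 3, 5, best_n, 20, 30):
        print(f"{n:2d} terms: error = {errors[n]:.3e}")
```

For x = 0.1 the error shrinks for the first ten or so terms and then grows without bound, which is the behavior the paragraph above describes: more terms help only up to a point.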

Not to despair, however, because in cosmology, Taylor series work well (locally in time) because the expansion is relatively smooth and differentiable. In quantum gravity and string theory, the math gets trickier.

Anyway, this article, Scientists claim to find 'first observational evidence supporting string theory,' which could finally reveal the nature of dark energy, does a good job of explaining the findings in somewhat accessible terms.

https://github.com/suzgunmirac/BIG-Bench-Hard