Perspective on Risk - May 18, 2024 (AI Stuff)

Exponential Effect; Major Developments; LLMs and Organizational Design; Moravec's Paradox; AI in Supervision; and in Medicine; Go NY Go NY Go!

Caught Covid (for the first time) earlier this week, so I’ve had time to finalize one last divergence before I revert to the more boring finance and banking stuff. Technology is one of my “big 3” forces (along with demographics and globalization) that will shape future returns.

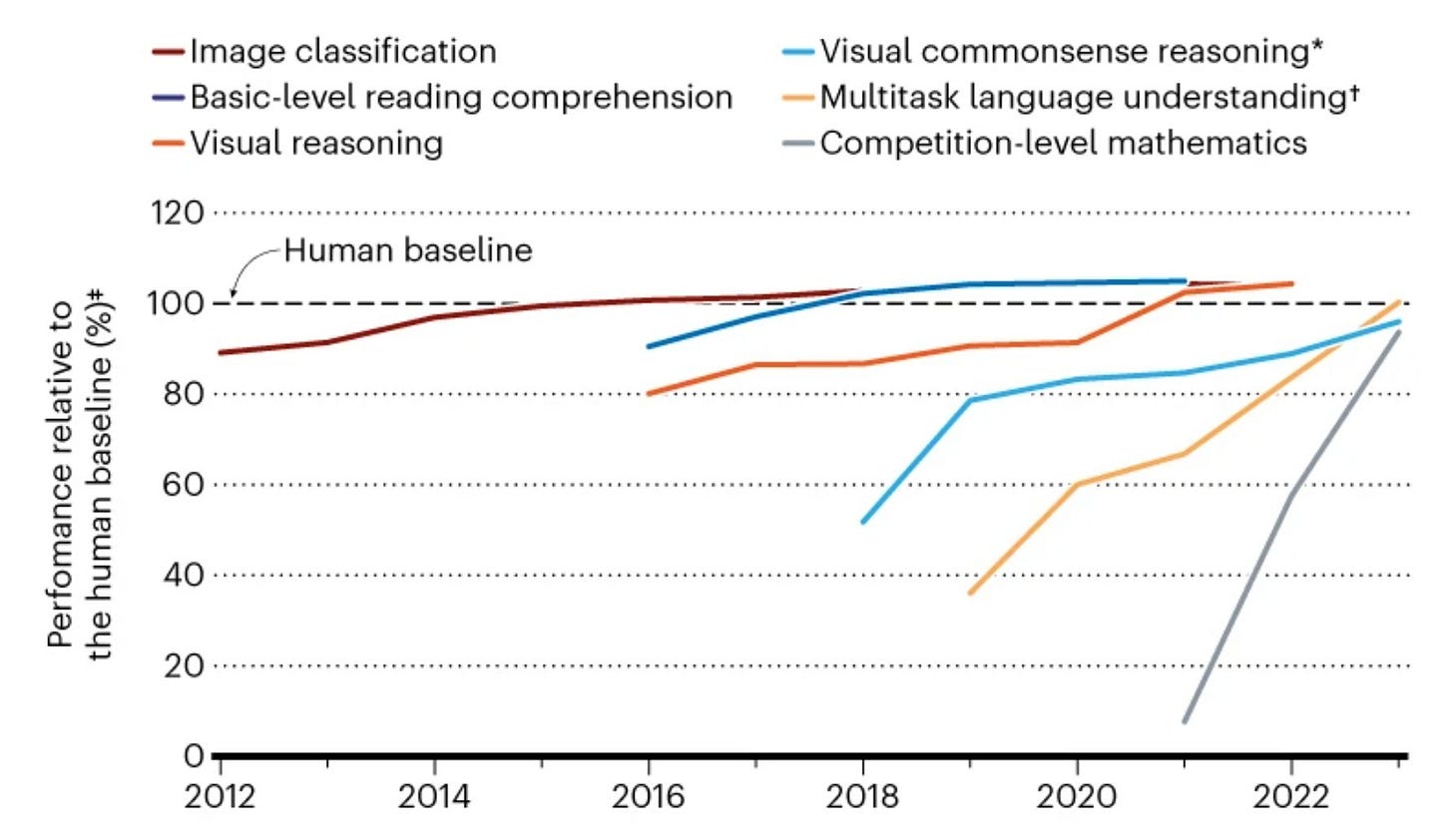

The Exponential Effect Is About To Hit Home

I’ve written before about the power of exponentials. It is something that Peter Hancock tried to teach AIG. The biggest exponential is in technology, and I think specifically in this AI/LLM wave.

On some scale, technology has felt linear to many of us. Sure, we went from 5-1/4” to 3.5” floppy disks, to CD-ROMs, to thumb drives, and now to purely virtual storage, but each step was just an improvement of an existing function (storage). Now we are on the verge of something different.

Let’s give an example. You have a business with $1,000 in daily revenue that grows a mere 1% per day. After a week, your revenue will be up ~$72 to ~$1,072. After a month, you feel like you are really cooking; revenue is up to ~$1,348. But by the end of the year, if the mere 1% growth rate continues, your daily revenue will be up to ~$37,783. We are at the one or two month mark now.
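For anyone who wants to check the compounding, here is a minimal Python sketch. The function name `revenue_after` is mine, and I am assuming a 30-day month and a 365-day year; it is just the standard compound-growth formula, start × (1 + rate)^days.

```python
def revenue_after(days: int, start: float = 1_000.0, daily_growth: float = 0.01) -> float:
    """Daily revenue after compounding `daily_growth` once per day for `days` days."""
    return start * (1 + daily_growth) ** days


if __name__ == "__main__":
    # Assumed horizons: 7-day week, 30-day month, 365-day year.
    for label, days in [("1 week", 7), ("1 month", 30), ("1 year", 365)]:
        print(f"{label:>8}: ${revenue_after(days):,.0f}")
```

Try a second month (`revenue_after(60)`) to see how little has happened at the point where I think we are now, compared with where the curve ends up.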

Ezra Klein had a nice interview with Dario Amodei. Dario led the creation of GPT-2 at OpenAI before leaving to start Anthropic.

They start out discussing this observation from Ezra:

So there are these two very different rhythms I’ve been thinking about with A.I. One is the curve of the technology itself, how fast it is changing and improving. And the other is the pace at which society is seeing and reacting to those changes.

They then list a number of steps where the technology advanced but public awareness didn’t, until the release of ChatGPT; the underlying technology is a smooth exponential, but the awareness is a step function.

But the big thing to me was HOW FAST this next generation of change will be.

EK: So when you think about these break points and you think into the future, what other break points do you see coming where A.I. bursts into social consciousness or used in a different way?

DA: The models taking actions in the world is going to be a big one. … Instead of just, I ask it a question and it answers, and then maybe I follow up and it answers again … the model being able to do things end to end or going to websites or taking actions on your computer for you.

I think all of that is coming in the next, I would say — I don’t know — three to 18 months, with increasing levels of ability.

So far, it’s been this very passive — it’s like, I go to the Oracle. I ask it a question, and the Oracle tells me things.

These are agentic models; we’ll talk more about them shortly.

DA: In terms of what we need to make it work, one thing is, literally, we just need a little bit more scale. And I think the reason we’re going to need more scale is — to do one of those things you described (such as fully automating the planning and reservations of a birthday party) can easily get 20 or 30 layers deep.

the industry is going to get a new generation of models every probably four to eight months. And so, my guess — I’m not sure — is that to really get these things working well, we need maybe one to four more generations. So that ends up translating to 3 to 24 months or something like that.

You may have heard talk about researchers being scared of the models’ capabilities to do harm, or to assist those seeking to do harm. Now, before I give you the quotes, here is an excerpt from Anthropic’s Responsible Scaling Policy that will help you understand the jargon:

ASL-1 refers to systems which pose no meaningful catastrophic risk, for example a 2018 LLM or an AI system that only plays chess.

ASL-2 refers to systems that show early signs of dangerous capabilities – for example ability to give instructions on how to build bioweapons – but where the information is not yet useful due to insufficient reliability or not providing information that e.g. a search engine couldn’t. Current LLMs, including Claude, appear to be ASL-2.

ASL-3 refers to systems that substantially increase the risk of catastrophic misuse compared to non-AI baselines (e.g. search engines or textbooks) OR that show low-level autonomous capabilities.

ASL-4 and higher (ASL-5+) is not yet defined as it is too far from present systems, but will likely involve qualitative escalations in catastrophic misuse potential and autonomy.

EZRA KLEIN: When you imagine how many years away, just roughly, A.S.L. 3 is and how many years away A.S.L. 4 is, right, you’ve thought a lot about this exponential scaling curve. If you just had to guess, what are we talking about?

DARIO AMODEI: Yeah, I think A.S.L. 3 could easily happen this year or next year. I think A.S.L. 4 —

EZRA KLEIN: Oh, Jesus Christ.

DARIO AMODEI: No, no, I told you. I’m a believer in exponentials. I think A.S.L. 4 could happen anywhere from 2025 to 2028.

EZRA KLEIN: So that is fast.

DARIO AMODEI: Yeah, no, no, I’m truly talking about the near future here. I’m not talking about 50 years away.

Major Developments

OpenAI Still Leads, But The Gap Is Closing (at least until we see GPT-5)

We see three different major strategies: OpenAI is developing one model capable of doing everything, Google is developing specialized domain-specific models, and Meta is encouraging open-source architecture.

Retrieval Augmented Generation (RAG)

As I mentioned in previous AI/LLM posts, the next big evolution of these models is the agentic workflow. Put simply, think of a lead model delegating tasks to other sub-models.

Training an LLM takes considerable time and compute power, and once trained, the model is a bit ‘static’ in its knowledge. The way this is overcome is through Retrieval Augmented Generation (RAG). The easiest way to think of RAG is that the LLM uses the internet (or internal databases) to look up current (or proprietary) information and then answers with that material in front of it. For those looking to dive deeper on RAG, here are two source articles.

Almost an Agent: What GPTs can do

A Survey on RAG Meets LLMs: Towards Retrieval-Augmented Large Language Models
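To make the RAG idea concrete, here is a toy Python sketch of the "look it up, then ask" pattern. Everything in it is an assumption for illustration: the three-sentence `DOCUMENTS` list stands in for the internet or an internal database, the word-overlap scoring stands in for the learned embeddings real systems use, and the function names are hypothetical. No actual LLM is called; the sketch stops at building the prompt that would be sent to one.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for a document store; the sentences restate facts from this post.
DOCUMENTS = [
    "GPT-3 had a context window of 2,048 tokens.",
    "Gemini 1.5 Pro has an input window of 2 million tokens.",
    "AlphaFold 3 can map a protein's interactions with other molecules.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question (the retrieval step)."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Put the retrieved text in front of the question (the augmentation step)."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("How large was the GPT-3 context window?", DOCUMENTS))
```

The point of the design is that the model's weights never change: fresh or proprietary knowledge arrives through the prompt, so updating what the system "knows" is just a matter of updating the document store.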

Huge Increase in Token Input Context Window

Larger input context windows make the LLMs much more useful. Gemini 1.5 Pro has increased the size of its input window to 2 million tokens.

A larger input window leads to more coherent and contextually relevant outputs. With a larger input window, the model can track long-range dependencies across the material it is given, which is crucial for tasks that require understanding and maintaining context over longer spans of text. Tasks that require reasoning, understanding of complex relationships, or the ability to draw insights from a larger context can benefit from a larger input window. The model can consider more information simultaneously, potentially leading to better performance on these types of tasks.

For context, GPT-3 had a context window of 2,048 tokens. Two million tokens is the equivalent of several full-length books, or thousands of Wikipedia articles.
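A quick back-of-the-envelope sketch of that jump in Python. The 0.75 words-per-token figure is an assumption (a commonly quoted rule of thumb for English text, which varies by tokenizer and language), and the function name is mine.

```python
WORDS_PER_TOKEN = 0.75  # assumption: rough rule of thumb for English text


def approx_words(context_tokens: int) -> int:
    """Rough number of English words that fit in a context window of this size."""
    return round(context_tokens * WORDS_PER_TOKEN)


if __name__ == "__main__":
    gpt3_window = 2_048
    big_window = 2_000_000
    print(f"GPT-3 window:    ~{approx_words(gpt3_window):,} words")
    print(f"2M-token window: ~{approx_words(big_window):,} words")
    print(f"Growth factor:   ~{big_window / gpt3_window:,.0f}x")
```

Under that assumption, the older window holds a few pages of text, while the newer one holds on the order of a million and a half words, close to a thousandfold increase.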

Multi-Token Prediction

Better & Faster Large Language Models via Multi-token Prediction

Large language models such as GPT and Llama are trained with a next-token prediction loss. In this work, we suggest that training language models to predict multiple future tokens at once results in higher sample efficiency.
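To see what changes between the two training setups, here is a small Python sketch of the training targets only. It is my illustration, not the paper's code: the function name and the example sentence are hypothetical, and how the model actually produces several predictions at once is not shown.

```python
def prediction_targets(tokens: list[str], n_future: int = 1) -> list[tuple[list[str], list[str]]]:
    """Pair each prefix of the sequence with the next `n_future` tokens to be predicted."""
    pairs = []
    # Stop early enough that every prefix has a full set of n_future targets.
    for t in range(len(tokens) - n_future):
        context = tokens[: t + 1]
        targets = tokens[t + 1 : t + 1 + n_future]
        pairs.append((context, targets))
    return pairs


if __name__ == "__main__":
    sentence = ["the", "cat", "sat", "on", "the", "mat"]
    print("Next-token prediction (n_future=1):")
    for context, targets in prediction_targets(sentence, n_future=1):
        print(f"  {context} -> {targets}")
    print("Multi-token prediction (n_future=3):")
    for context, targets in prediction_targets(sentence, n_future=3):
        print(f"  {context} -> {targets}")
```

With `n_future=1` each prefix is asked for a single next word; with `n_future=3` the same prefix is asked for the next three, so every position in the training text supplies more signal per pass.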

Native Multi-Modal Capabilities

A user on Twitter sent GPT-4o a 9-second video clip from the Knicks-Pacers game and asked it to watch and analyze the play. Go ahead and watch the clip.

https://twitter.com/i/status/1789495371850264868 (9 seconds)

All of these features we are starting to see appear — lower prices, higher speeds, multimodal capability, voice, large context windows, agentic behavior — are about making AI more present and more naturally connected to human systems and processes. If an AI that seems to reason like a human being can see and interact and plan like a human being, then it can have influence in the human world. This is where AI labs are leading us: to a near future of AI as coworker, friend, and ubiquitous presence.

Moravec's Paradox

Moravec's paradox is the observation in artificial intelligence and robotics that, contrary to traditional assumptions, reasoning requires very little computation, but sensorimotor and perception skills require enormous computational resources.

It is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility.

Large Language Models and Organizational Design

NBER Organizational Economics Meeting, Spring 2024

How does Artificial Intelligence (AI) affect the organization of work? We incorporate AI into an economy where humans endogenously sort into hierarchical firms: Less knowledgeable agents become “workers” (execute routine tasks), while more knowledgeable agents become “managers” (specialize in problem-solving). We model AI as an algorithm that uses computing power to mimic the behavior of humans with a given knowledge. We show that AI not only leads to occupational displacement but also changes the endogenous matching between all workers and managers. This leads to new insights regarding AI’s effects on productivity, firm size, and degree of decentralization.

A.I. In Bank Supervision

Leveraging language models for prudential supervision (Bank Underground)

Currently, a pioneering effort is under way to fill this gap by developing a domain-adapted model known as Prudential Regulation Embeddings Transformer (PRET), specifically tailored for financial supervision. PRET is an initiative to enhance the precision of semantic information retrieval within the field of financial regulations. PRET’s novelty lies in its training data set: web-scraped rules and regulations from the Basel Framework that is pre-processed and transformed into a machine-readable corpus, coupled with LLM-generated synthetic text. This targeted approach provides PRET with a deep and nuanced understanding of the Basel Framework language, overlooked by broader models.

There are so many potential uses: predicting financial condition, checking policy compliance against regulatory guidelines, and more. Still, this should be just a tool for a good examiner, not a substitute (we’ll wait for the robots for that).

A.I. in Medicine Advancing Quickly

AlphaFold 3

Google DeepMind’s New AlphaFold AI Maps Life’s Molecular Dance in Minutes

This week, DeepMind and Isomorphic Labs released a big new update that allows the algorithm to predict how proteins work inside cells. Instead of only modeling their structures, the new version—dubbed AlphaFold 3—can also map a protein’s interactions with other molecules.

Med-Gemini Models Expanded

Google Research expanded the capabilities of its AI models for Med-Gemini-2D, Med-Gemini-3D and Med-Gemini Polygenic. Google said it fine-tuned Med-Gemini capabilities using histopathology, dermatology, 2D and 3D radiology, genomic and ophthalmology data.

Capabilities of Gemini Models in Medicine

Advancing Multimodal Medical Capabilities of Gemini

Med-Gemini-2D sets a new standard for AI-based chest X-ray (CXR) report generation based on expert evaluation, exceeding previous best results across two separate datasets by an absolute margin of 1% and 12%, where 57% and 96% of AI reports on normal cases, and 43% and 65% on abnormal cases, are evaluated as “equivalent or better” than the original radiologists’ reports.

We demonstrate the first ever large multimodal model-based report generation for 3D computed tomography (CT) volumes using Med-Gemini-3D, with 53% of AI reports considered clinically acceptable, although additional research is needed to meet expert radiologist reporting quality.

In histopathology, ophthalmology, and dermatology image classification, Med-Gemini-2D surpasses baselines across 18 out of 20 tasks and approaches task-specific model performance.

Beyond imaging, Med-Gemini-Polygenic outperforms the standard linear polygenic risk score-based approach for disease risk prediction and generalizes to genetically correlated diseases for which it has never been trained.

CRISPR-GPT

CRISPR-GPT is an LLM agent that helps humans design and implement gene editing experiments, providing expert guidance across the entire experimental pipeline.

CRISPR-GPT: An LLM Agent for Automated Design of Gene-Editing Experiments

We introduce CRISPR-GPT, an LLM agent augmented with domain knowledge and external tools to automate and enhance the design process of CRISPR-based gene-editing experiments. CRISPR-GPT leverages the reasoning ability of LLMs to facilitate the process of selecting CRISPR systems, designing guide RNAs, recommending cellular delivery methods, drafting protocols, and designing validation experiments to confirm editing outcomes. We showcase the potential of CRISPR-GPT for assisting non-expert researchers with gene-editing experiments from scratch and validate the agent's effectiveness in a real-world use case.