Perspective on Risk - July 18, 2024 (Forecasting)

Forecasting Is Hard; Financial Stability; LLMs; Overconfidence; 3 Types Of Bayesians; Measuring Outrage; Caitlin Clark; Measuring Luck; Algorithms; Tetlock’s Judgment Matrix

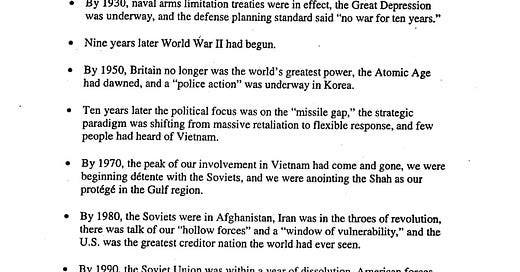

Forecasting Is Hard, Especially About The Future

Forecasting Financial Stability

Anna Kovner, Director of Financial Stability Policy Research at the NY Fed, delivered remarks at the Forecasters Club of New York.

Methodology

… an economic shock can trigger a self-reinforcing loop as margin calls impair credit provision [that] lower asset prices and depress economic activity. … financial crises lead to more severe economic downturns ... It is this nonlinear response that creates financial stability concerns and that we seek to gauge in the financial stability outlook.

One approach to quantifying financial stability risks is to estimate the probability of very bad outcomes in real economic variables such as GDP and inflation using quantile regressions that measure the relationship of these variables in bad times instead of just on average.

One way of visualizing the financial stability outlook is to look at the size of the tail of the GDP growth distribution. … when the bad tail is wide, the economy is vulnerable.
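Quantile regression swaps the squared-error loss of ordinary least squares for the asymmetric "pinball" (check) loss. The fitted line therefore tracks a chosen conditional quantile, such as the 5th percentile of GDP growth, rather than the mean. Below is a minimal pure-Python sketch for a single predictor (for example, a hypothetical financial conditions index). The function names and the brute-force solver are illustrative assumptions, not the NY Fed's implementation. The solver relies on the fact that an optimal two-parameter quantile fit passes through at least two observations, so it is practical only for small samples.

```python
from itertools import combinations


def pinball_loss(tau: float, residual: float) -> float:
    """Check (pinball) loss: under-predictions weighted by tau, over-predictions by 1 - tau."""
    return tau * residual if residual >= 0 else (tau - 1.0) * residual


def fit_quantile_line(xs, ys, tau):
    """Exact linear quantile regression y ~ a + b*x by brute force.

    An optimal solution interpolates at least two data points, so we try
    every line through a pair of points with distinct x and keep the one
    with the lowest total pinball loss. Cost is O(n^3): illustration only.
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        if x1 == x2:
            continue  # vertical line: not a valid fit
        slope = (y2 - y1) / (x2 - x1)
        intercept = y1 - slope * x1
        loss = sum(pinball_loss(tau, y - (intercept + slope * x)) for x, y in zip(xs, ys))
        if best is None or loss < best[0]:
            best = (loss, intercept, slope)
    if best is None:
        raise ValueError("need at least two distinct x values")
    return best[1], best[2]  # (intercept, slope)
```

Fitting the same data at `tau=0.05` and `tau=0.5` and comparing slopes shows whether the predictor moves the bad tail more than the middle of the distribution. That asymmetry is the nonlinearity the remarks describe. Real work would use an LP-based solver on much larger samples.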

A second approach to capturing hidden risks to economic outcomes is examining patterns in the financial system historically associated with the amplification of negative real shocks both in the U.S. and internationally. The Federal Reserve’s Financial Stability Report is anchored in this type of monitoring.

The first vulnerability is high asset prices relative to fundamentals. … The second vulnerability is business and household leverage. … The third and fourth vulnerabilities arise from the financial sector, from both its leverage and the extent of maturity and liquidity transformation.

Financial Stability Vulnerabilities in 2024 and Beyond

I am keeping my eyes on four key financial stability vulnerabilities for 2024 and beyond.

While the banking industry overall is sound and resilient, changes in interest rates have negatively affected the value of long-duration assets and the impact of revaluations of commercial real estate has been unevenly distributed.
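A plain bond-pricing calculation makes the rate sensitivity of long-duration assets concrete. The sketch below prices a hypothetical 10-year, annual-pay bond with a 1.5% coupon before and after a 300 basis point rise in yields. It also reports the modified-duration approximation. The bond terms and yields are illustrative assumptions, not figures from the remarks.

```python
def bond_price(face, coupon_rate, years, ytm):
    """Price of an annual-pay fixed-coupon bond: discounted coupons plus discounted face."""
    coupon = face * coupon_rate
    pv_coupons = sum(coupon / (1 + ytm) ** t for t in range(1, years + 1))
    return pv_coupons + face / (1 + ytm) ** years


def modified_duration(face, coupon_rate, years, ytm):
    """Macaulay duration divided by (1 + ytm): first-order % price change per unit yield change."""
    price = bond_price(face, coupon_rate, years, ytm)
    coupon = face * coupon_rate
    # Macaulay duration: present-value-weighted average time of the cash flows
    weighted = sum(t * coupon / (1 + ytm) ** t for t in range(1, years + 1))
    weighted += years * face / (1 + ytm) ** years
    macaulay = weighted / price
    return macaulay / (1 + ytm)


if __name__ == "__main__":
    p0 = bond_price(100, 0.015, 10, 0.015)  # prices at par when coupon equals yield
    p1 = bond_price(100, 0.015, 10, 0.045)  # after a 300 bp rise in yields
    d = modified_duration(100, 0.015, 10, 0.015)
    print(f"exact price change:     {100 * (p1 / p0 - 1):.1f}%")
    print(f"duration approximation: {-100 * d * 0.03:.1f}%")
```

For a move this large, the duration estimate overstates the loss slightly because of convexity. Either way, the point stands: a holder of such assets carries a large unrealized loss when rates rise.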

A second concern emerging from higher rates is the acceleration of deposits into prime money market funds and stablecoins.

My third area of focus is U.S. Treasury markets. … Treasury market liquidity has been somewhat strained across almost all measures for some time, including bid-ask spreads, market depth, and price impact.
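The three liquidity measures named here have simple textbook versions, sketched below on hypothetical inputs:

- the relative bid-ask spread, computed from the best bid and best ask;
- market depth, taken as the quantity resting at the best quotes;
- price impact, estimated as the OLS slope of price changes on signed order flow (a Kyle's-lambda-style estimate).

The function names and input format are assumptions for illustration.

```python
def relative_spread_bp(bid: float, ask: float) -> float:
    """Quoted bid-ask spread as a fraction of the midpoint, in basis points."""
    mid = (bid + ask) / 2
    return (ask - bid) / mid * 1e4


def top_of_book_depth(bid_size: float, ask_size: float) -> float:
    """Market depth: total quantity resting at the best bid and best ask."""
    return bid_size + ask_size


def price_impact(price_changes, signed_volumes):
    """OLS slope of price changes on signed order flow (buys positive, sells negative).

    A larger slope means a given trade size moves the price more,
    i.e. a less liquid market.
    """
    n = len(price_changes)
    if n != len(signed_volumes) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_q = sum(signed_volumes) / n
    mean_p = sum(price_changes) / n
    cov = sum((q - mean_q) * (p - mean_p) for q, p in zip(signed_volumes, price_changes))
    var = sum((q - mean_q) ** 2 for q in signed_volumes)
    if var == 0:
        raise ValueError("signed volume has no variation")
    return cov / var
```

"Strained" liquidity shows up as wider spreads, thinner depth, and a steeper impact slope all at once. That is why the remarks stress that the deterioration appears across almost all measures.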

Finally, a key vector for financial vulnerability is cyberattacks. These have been occurring at accelerating rates, so we must work to reduce their amplification through the financial system.

LLMs Almost As Good As “Crowd of Competitive Forecasters”

You all know I am a huge fan of both Superforecasting techniques and AI/LLMs.

Approaching Human-Level Forecasting with Language Models (arXiv)

Forecasting future events is important for policy and decision making.

In this work, we study whether language models (LMs) can forecast at the level of competitive human forecasters. Towards this goal, we develop a retrieval-augmented LM system designed to automatically search for relevant information, generate forecasts, and aggregate predictions. To facilitate our study, we collect a large dataset of questions from competitive forecasting platforms. Under a test set published after the knowledge cut-offs of our LMs, we evaluate the end-to-end performance of our system against the aggregates of human forecasts.

On average, the system nears the crowd aggregate of competitive forecasters, and in some settings surpasses it.

Our work suggests that using LMs to forecast the future could provide accurate predictions at scale and help to inform institutional decision making.
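"Nears the crowd aggregate" implies two ingredients: a way to pool several forecasts and a scoring rule to compare them. The sketch below assumes the Brier score, the standard metric on forecasting platforms, and a trimmed mean for pooling. Neither detail is stated in the excerpt above, and the function names are hypothetical.

```python
def brier_score(probabilities, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    if not outcomes or len(probabilities) != len(outcomes):
        raise ValueError("need equal-length, non-empty inputs")
    return sum((p - o) ** 2 for p, o in zip(probabilities, outcomes)) / len(outcomes)


def trimmed_mean(values, trim_fraction=0.1):
    """Average after dropping the same fraction of the lowest and highest values."""
    if not values or not 0 <= trim_fraction < 0.5:
        raise ValueError("need values and 0 <= trim_fraction < 0.5")
    ordered = sorted(values)
    k = int(len(ordered) * trim_fraction)  # number trimmed from each end
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)
```

A perfectly confident, correct forecast scores 0, and always answering 50% scores 0.25. Trimming keeps one wild model sample from dragging the pooled probability.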

How (Over)Confident Are You?

My results:

Mean confidence: 74.20%

Actual percent correct: 66.00%

You want your mean confidence and actual score to be as close as possible.

Mean confidence on correct answers: 77.27%

Mean confidence on incorrect answers: 68.24%

Feel free to share your results.
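The quiz statistics are simple averages, so anyone can compute them for their own answers. A sketch, assuming each answer records a confidence between 0 and 1 and a correct/incorrect flag (the function name and output keys are hypothetical):

```python
def calibration_summary(confidences, correct):
    """Summarize over/underconfidence from per-question confidence (0-1) and correctness flags."""
    n = len(confidences)
    if n == 0 or n != len(correct):
        raise ValueError("need equal-length, non-empty inputs")
    right = [c for c, ok in zip(confidences, correct) if ok]
    wrong = [c for c, ok in zip(confidences, correct) if not ok]
    mean_conf = sum(confidences) / n
    accuracy = sum(1 for ok in correct if ok) / n
    return {
        "mean_confidence": mean_conf,
        "accuracy": accuracy,
        "overconfidence": mean_conf - accuracy,  # > 0 means overconfident
        "mean_conf_correct": sum(right) / len(right) if right else float("nan"),
        "mean_conf_incorrect": sum(wrong) / len(wrong) if wrong else float("nan"),
    }
```

As a consistency check on the results above: 0.66 × 77.27% + 0.34 × 68.24% ≈ 74.20%, which matches the reported mean confidence. The 8.2 percentage point gap between 74.20% and 66.00% is the measured overconfidence.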

Three Types Of Bayesians

Bayesian statistics: the three cultures

Subjective Bayesian

A traditional subjective Bayesian will assume a data generating distribution (aka a likelihood when viewed as a function of the parameters). Then, conditional on this assumption, they encode their prior beliefs as a prior distribution over parameters. After this, they turn the crank on posterior inference and don’t look back.
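In the simplest case, a binomial (success/failure) likelihood with a Beta prior, "turning the crank" is a one-line conjugate update. This sketch assumes the analyst's belief can be written as a Beta(alpha, beta) distribution; the class name is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPrior:
    """Beta(alpha, beta) belief about a success probability."""

    alpha: float
    beta: float

    def update(self, successes: int, failures: int) -> "BetaPrior":
        """Conjugate update: Beta prior + binomial data -> Beta posterior."""
        return BetaPrior(self.alpha + successes, self.beta + failures)

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)
```

For example, a prior of Beta(6, 4), a belief centered on 60%, updated with 7 successes and 13 failures gives Beta(13, 17), whose mean is 13/30 ≈ 0.433. The subjective Bayesian stops there. The pragmatic Bayesian, below, would go on to check the fit.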

Objective Bayesian

Reference analysis produces objective Bayesian inference, in the sense that inferential statements depend only on the assumed model and the available data, and the prior distribution used to make an inference is least informative in a certain information-theoretic sense. (Berger, Bernardo and Sun)
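As a worked example of "least informative," take a single Bernoulli success probability θ with k successes in n trials. For a one-parameter model like this, the reference prior coincides with the Jeffreys prior, which is proportional to the square root of the Fisher information. The binomial setup is an illustrative choice, not from the quoted text.

$$
\begin{aligned}
\ell(\theta) &= k \log\theta + (n-k)\log(1-\theta) \\
I(\theta) &= -\mathbb{E}\!\left[\frac{\partial^2 \ell}{\partial \theta^2}\right] = \frac{n}{\theta(1-\theta)} \\
\pi(\theta) &\propto \sqrt{I(\theta)} \propto \theta^{-1/2}(1-\theta)^{-1/2}, \quad \text{i.e. } \theta \sim \mathrm{Beta}\!\left(\tfrac12, \tfrac12\right) \\
\theta \mid k &\sim \mathrm{Beta}\!\left(k + \tfrac12,\; n - k + \tfrac12\right), \qquad \mathbb{E}[\theta \mid k] = \frac{k + \tfrac12}{n + 1}
\end{aligned}
$$

The prior contributes only half a pseudo-success and half a pseudo-failure, so the posterior is driven almost entirely by the data.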

Pragmatic Bayesian

First, the probability model is joint, which means it includes the prior and the likelihood. Second, it characterizes our inputs as “knowledge” rather than “belief.” Third, it encourages practitioners to evaluate how well a model fits and its predictive consequences, and if there are issues, go back and try again.

The process of Bayesian data analysis can be idealized by dividing it into the following three steps:

1. Setting up a full probability model: a joint probability distribution for all observable and unobservable quantities in a problem. The model should be consistent with knowledge about the underlying scientific problem and the data collection process.

2. Conditioning on observed data: calculating and interpreting the appropriate posterior distribution, i.e. the conditional probability distribution of the unobserved quantities of ultimate interest, given the observed data.

3. Evaluating the fit of the model and the implications of the resulting posterior distribution: how well does the model fit the data, are the substantive conclusions reasonable, and how sensitive are the results to the modeling assumptions in step 1? In response, one can alter or expand the model and repeat the three steps.
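Step 3 is where the pragmatic approach differs most in practice. A standard tool is the posterior predictive check: simulate replicated datasets from the fitted model and ask whether the observed data look typical. The sketch below assumes an i.i.d. success/failure model with a Beta prior and uses the longest streak as the test statistic. The model, statistic, and function names are illustrative choices, not taken from the source.

```python
import random


def longest_run(seq):
    """Length of the longest streak of identical consecutive values."""
    best = current = 1
    for prev, nxt in zip(seq, seq[1:]):
        current = current + 1 if nxt == prev else 1
        best = max(best, current)
    return best


def posterior_predictive_pvalue(data, draws=4000, alpha=1.0, beta=1.0, seed=0):
    """Posterior predictive check for an i.i.d. Bernoulli model with a Beta prior.

    For each draw: sample theta from the Beta posterior, simulate a replicated
    dataset of the same length, and record whether its longest streak is at
    least as long as the observed one. Values near 0 or 1 flag misfit.
    """
    if not data:
        raise ValueError("data must be non-empty")
    rng = random.Random(seed)
    n, k = len(data), sum(data)
    observed = longest_run(data)
    extreme = 0
    for _ in range(draws):
        theta = rng.betavariate(alpha + k, beta + n - k)  # posterior draw
        replicate = [1 if rng.random() < theta else 0 for _ in range(n)]
        if longest_run(replicate) >= observed:
            extreme += 1
    return extreme / draws
```

Twenty successes followed by twenty failures have the same count as a well-mixed sequence, so steps 1 and 2 alone would not notice anything wrong. The check in step 3 returns a p-value near 0. That is the signal to "go back and try again" with a model that allows dependence.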

Measuring Outrage

The Outrage Heuristic (Sunstein)

Many moral judgments are rooted in the outrage heuristic. In making such judgments about certain personal injury cases, people’s judgments are both predictable and widely shared. With respect to outrage (on a bounded scale of 1 to 6) and punitive intent (also on a bounded scale of 1 to 6), the judgments of one group of six people, or twelve people, nicely predict the judgements of other groups of six people, or twelve people. Moreover, outrage judgments are highly predictive of punitive intentions. Because of their use of the outrage heuristic, people are intuitive retributivists. People care about deterrence, but they do not think in terms of optimal deterrence. Because outrage is category-specific, those who use the outrage heuristic are likely to produce patterns that they would themselves reject, if only they were to see them. Because people are intuitive retributivists, they reject some of the most common and central understandings in economic and utilitarian theory. To the extent that a system of civil penalties or criminal justice depends on the moral psychology of ordinary people, it is likely to operate on the basis of the outrage heuristic, and will, from the utilitarian point of view, end up making serious and systematic errors.

Caitlin Clark Is An Outlier

When constructing models, one frequently needs to identify and eliminate outliers so that the model is accurate for the vast majority of cases. Caitlin Clark is an outlier. She’s the sole green dot in the top right quadrant.
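One common, simple screen for outliers is Tukey's fences, which flag points far outside the interquartile range. The sketch below applies this rule to a single variable. The 1.5 multiplier is the conventional default, and the function name is hypothetical. A point like Clark's, which is extreme on two axes at once, may call for a multivariate screen instead (e.g. Mahalanobis distance).

```python
import statistics


def tukey_outliers(values, k=1.5):
    """Return the values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    if len(values) < 2:
        return []  # quartiles are undefined for fewer than two points
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]
```

Flagging is the mechanical part. Whether to drop the point, model it separately, or treat it as the most interesting observation in the dataset remains a judgment call.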

Measuring Luck

We Measured "Luck" in the NFL. It’s a BIG deal. (Youtube)

This is a good video for teaching junior staff about stochastic, rather than deterministic, outcomes. It explains how chance events, termed "luck," critically influence the outcomes of US football games and why understanding this stochastic element is essential.

Defining and Quantifying Luck:

Luck in the NFL is operationalized as stochastic events: random occurrences beyond the control of players and teams. We can categorize these events into four types: Dropped Opponent Interceptions, Dropped Passes by the Opponent, Missed Kicks and Fumble Recoveries.

Given the limited number of possessions in an NFL game (approximately 25 per game compared to 210 in an NBA game), the variance introduced by luck is substantial. In single-elimination postseason games, this variance is even more pronounced. Analysis reveals that a positive luck score is associated with a 69% win probability in postseason games, underscoring how much luck shapes game outcomes.
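The possession-count argument is the law of large numbers at work: the fewer independent scoring chances there are, the more often the weaker team wins by chance. A toy model makes this concrete. It assumes, hypothetically, that each team gets the same number of possessions and that each possession independently scores with a fixed team-specific probability. That ignores score size, game state, and nearly everything else that matters in real games.

```python
from math import comb


def binom_pmf(n, p):
    """Probabilities of 0..n successes in n independent trials with success probability p."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def better_team_win_prob(possessions, p_better, p_worse):
    """P(better team outscores worse team), counting ties as half a win.

    Toy model: each of `possessions` per team scores one 'point' independently,
    with probability p_better or p_worse respectively.
    """
    a = binom_pmf(possessions, p_better)
    b = binom_pmf(possessions, p_worse)
    win = tie = 0.0
    for i, pa in enumerate(a):
        for j, pb in enumerate(b):
            if i > j:
                win += pa * pb
            elif i == j:
                tie += pa * pb
    return win + 0.5 * tie
```

Splitting the per-game totals above evenly gives roughly 12 possessions per team in football and 105 in basketball. With the same per-possession edge (say 0.40 vs. 0.35), the better team wins noticeably more often over 105 possessions than over 12.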

Over a regular season, the law of large numbers suggests that luck should regress to the mean. However, anomalies persist. The 2023 Vikings, for instance, experienced a consistent -10% luck factor per game, accumulating to a significant disadvantage.

Causality

BTW, if you want to check whether a research paper actually demonstrates causality, Ethan Mollick's ChatGPT tool "Correlation isn't Causation - A causal explainer" will answer the question for you: just drop in the paper.

Algorithms

A great deal of work in behavioral science emphasizes that statistical predictions often outperform clinical predictions. Formulas tend to do better than people do, and algorithms tend to outperform human beings, including experts.

One reason is that algorithms do not show inconsistency or “noise”; another reason is that they are often free from cognitive biases. These points have broad implications for risk assessment in domains that include health, safety, and the environment. Still, there is evidence that many people distrust algorithms and would prefer a human decisionmaker.

We offer a set of preliminary findings about how a tested population chooses between a human being and an algorithm. In a simple choice between the two across diverse settings, people are about equally divided in their preference. We also find that a significant number of people are willing to shift in favor of algorithms when they learn something about them, but also that a significant number of people are unmoved by the relevant information. These findings have implications for unruly current findings about “algorithm aversion” and “algorithm appreciation.”
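The "noise" argument can be illustrated with a short simulation. It assumes a judge who knows exactly the right formula but applies it inconsistently. The weights, noise levels, and function name are hypothetical. Because the formula and the judge share the same knowledge, any gap in accuracy comes from inconsistency alone.

```python
import random


def simulate_noise(n_cases=5000, judge_noise=1.0, seed=42):
    """Compare a fixed linear formula with a 'judge' who applies it with random noise.

    Truth = 0.6*x1 + 0.4*x2 + irreducible error. The judge's forecast is the
    formula's output plus independent noise. Returns (formula MSE, judge MSE).
    """
    rng = random.Random(seed)
    formula_sq_err = judge_sq_err = 0.0
    for _ in range(n_cases):
        x1, x2 = rng.gauss(0, 1), rng.gauss(0, 1)
        truth = 0.6 * x1 + 0.4 * x2 + rng.gauss(0, 0.5)
        formula = 0.6 * x1 + 0.4 * x2
        judge = formula + rng.gauss(0, judge_noise)  # inconsistency, not bias
        formula_sq_err += (truth - formula) ** 2
        judge_sq_err += (truth - judge) ** 2
    return formula_sq_err / n_cases, judge_sq_err / n_cases
```

With `judge_noise=0` the two are identical, and any positive noise only adds error. This is the sense in which consistency alone can let a formula beat the expert whose judgment it mimics.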